Databricks on Azure with Terraform

Brendan Thompson • 4 August 2021 • 15 min read

Overview#

This post aims to provide a walk-through of how to deploy a Databricks cluster on Azure with its supporting infrastructure using Terraform. At the end of this post, you will have all the components required to be able to complete the Tutorial: Extract, transform, and load data by using Azure Databricks tutorial on the Microsoft website.

Once we have built out the infrastructure I will lay out the steps and results that the aforementioned tutorial goes through.

I will go through building out the Terraform code file by file, below are the files you will end up with:

.

├── aad.tf

├── databricks.tf

├── main.tf

├── network.tf

├── outputs.tf

├── providers.tf

├── synapse.tf

├── variables.tf

└── versions.tf

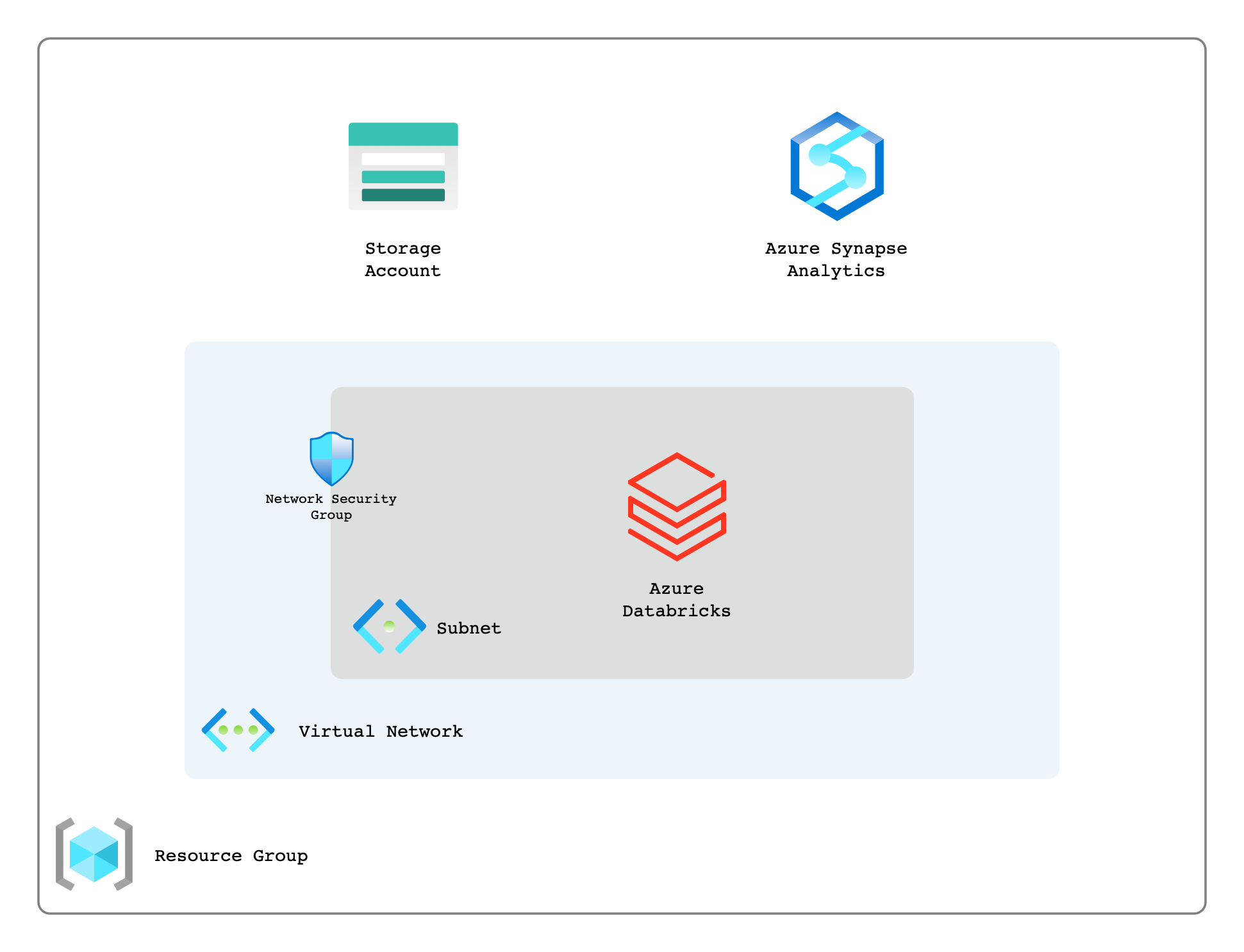

The below diagram outlines the high-level components that will be deployed in this post.

Providers#

A key file here is providers.tf, this is where we will declare the providers we require and any

configuration parameters that we need to pass to them.

provider "azurerm" {

features {}

}

provider "databricks" {

azure_workspace_resource_id = azurerm_databricks_workspace.this.id

}

As can be seen here we are setting the azurerm providers features attribute to be an empty

object, and telling databricks where to find the ID for the azurerm_databricks_workspace

resource.

Versions#

Another pretty important file in modern Terraform is versions.tf this is where we tell Terraform

what version of Terraform it must use as well constraints we may have about any providers.

For example, we may want to specify a provider version, or we may

be hosting a version/fork of the provider somewhere other than the default Terraform registry.

terraform {

required_version = ">= 1.0"

required_providers {

azurerm = {

source = "hashicorp/azurerm"

}

azuread = {

source = "hashicorp/azuread"

version = "~> 1.0"

}

databricks = {

source = "databrickslabs/databricks"

}

}

}

Variables#

Now that we have our providers and any constraints setup about our Terraform environment, it is time

to move onto what information we pass into our code, this is done by variables.tf.

variable "location" {

type = string

description = "(Optional) The location for resource deployment"

default = "australiaeast"

}

variable "environment" {

type = string

description = "(Required) Three character environment name"

validation {

condition = length(var.environment) <= 3

error_message = "Err: Environment cannot be longer than three characters."

}

}

variable "project" {

type = string

description = "(Required) The project name"

}

variable "databricks_sku" {

type = string

description = <<EOT

(Optional) The SKU to use for the databricks instance"

Default: standard

EOT

validation {

condition = can(regex("standard|premium|trial", var.databricks_sku))

error_message = "Err: Valid options are 'standard', 'premium' or 'trial'."

}

}

Above we have declared four variables, some of which are required and some that are optional. I find it good practice to mark a variable as optional or required in the description. On two of the variables validation has been added to ensure that what is being passed into our code is what Azure expects it to be. Doing this helps surface errors earlier.

Main#

main.tf is where anything that is foundational will usually live, an example of this for Azure is

broadly an azurerm_resource_group as this is likely to be consumed by any other code written. By

splitting out the code into different files it helps developers more easily understand what is going

on in the codebase.

In our main.tf as can be seen below we are declaring a few things.

locals {}– A local map that we can use to assist in naming our resourcesdata "azurerm_client_config" "current"- Gives us access to some properties about the client we are connected to Azure with such as;tenant_id,subscription_id, etcresource "azurerm_resource_group" "this" { ... }- Declares a resource group that we will use to store all our resources inresource "azurerm_key_vault" "this" { ... }- Where we will store any secrets and/or credentialsresource "azurerm_databricks_workspace" "this" { ... }- This is our actual Databricks instance that we will be utilizing later

locals {

naming = {

location = {

"australiaeast" = "aue"

}

}

}

data "azurerm_client_config" "current" {}

resource "azurerm_resource_group" "this" {

name = format("rg-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

location = var.location

}

resource "azurerm_key_vault" "this" {

name = format("kv-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

tenant_id = data.azurerm_client_config.current.tenant_id

sku_name = "standard"

access_policy {

tenant_id = data.azurerm_client_config.current.tenant_id

object_id = data.azurerm_client_config.current.object_id

secret_permissions = [

"Get",

"Set",

"Delete",

"Recover",

"Purge"

]

}

}

resource "azurerm_databricks_workspace" "this" {

name = format("dbs-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

sku = var.databricks_sku

custom_parameters {

virtual_network_id = azurerm_virtual_network.this.id

public_subnet_name = azurerm_subnet.public.name

private_subnet_name = azurerm_subnet.private.name

}

depends_on = [

azurerm_subnet_network_security_group_association.public,

azurerm_subnet_network_security_group_association.private,

]

}

As can be seen above we use the following format() blocks to more consistently name our resources.

This will help give engineers more confidence in the naming of resources, and if there is standard

that is kept to across the platform it will enable them to more easily orientate themselves within

any environment on the platform.

format("<Resource_Identifier>-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

Network#

Now that we have the code ready for our Databricks workspace we need to create the network as you

can see that we are referencing those in our main.tf file. The types of resources that we are

creating and their purpose are as follows;

azurerm_virtual_network- The virtual network (or VPC on AWS/GCP) that will be used to hold our subnets.azurerm_subnet- The subnets that will be associated with ourazurerm_databricks_workspaceazurerm_network_security_group- This is where any firewall type activity will be setup.azurerm_subnet_network_security_group_association- The association between the subnet and the network security group

resource "azurerm_virtual_network" "this" {

name = format("vn-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

location = azurerm_resource_group.this.location

resource_group_name = azurerm_resource_group.this.name

address_space = ["10.0.0.0/16"]

}

resource "azurerm_subnet" "private" {

name = format("sn-%s-%s-%s-priv",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

virtual_network_name = azurerm_virtual_network.this.name

address_prefixes = ["10.0.0.0/24"]

delegation {

name = "databricks-delegation"

service_delegation {

name = "Microsoft.Databricks/workspaces"

actions = [

"Microsoft.Network/virtualNetworks/subnets/join/action",

"Microsoft.Network/virtualNetworks/subnets/prepareNetworkPolicies/action",

"Microsoft.Network/virtualNetworks/subnets/unprepareNetworkPolicies/action",

]

}

}

}

resource "azurerm_network_security_group" "private" {

name = format("nsg-%s-%s-%s-priv",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

}

resource "azurerm_subnet_network_security_group_association" "private" {

subnet_id = azurerm_subnet.private.id

network_security_group_id = azurerm_network_security_group.private.id

}

resource "azurerm_subnet" "public" {

name = format("sn-%s-%s-%s-pub",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

virtual_network_name = azurerm_virtual_network.this.name

address_prefixes = ["10.0.1.0/24"]

delegation {

name = "databricks-delegation"

service_delegation {

name = "Microsoft.Databricks/workspaces"

actions = [

"Microsoft.Network/virtualNetworks/subnets/join/action",

"Microsoft.Network/virtualNetworks/subnets/prepareNetworkPolicies/action",

"Microsoft.Network/virtualNetworks/subnets/unprepareNetworkPolicies/action",

]

}

}

}

resource "azurerm_network_security_group" "public" {

name = format("nsg-%s-%s-%s-pub",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

}

resource "azurerm_subnet_network_security_group_association" "public" {

subnet_id = azurerm_subnet.public.id

network_security_group_id = azurerm_network_security_group.public.id

}

The network sizes declared above are rather large and ideally should not be used in any production environment, however, they are perfectly fine for development or proof of concept work as long as the network is never peered or connected to an express route where it might conflict with internal ranges.

- Delegation must be set on the subnets for the resource type

Microsoft.Databricks/workspaces - Network security groups must be associated with the subnets. It is suggested that the NSGs are empty as Databricks will assign the appropriate rules.

- In order to prevent an error about Network Intent Policy there must be an explicit

dependsOnbetween the network security group associations and the Databricks workspace.

Azure Active Directory (AAD)#

Before we look into creating the internals of our Databricks instance or our Azure Synapse database we must first create the Azure Active Directory (AAD) application that will be used for authentication.

resource "random_password" "service_principal" {

length = 16

special = true

}

resource "azurerm_key_vault_secret" "service_principal" {

name = "service-principal-password"

value = random_password.service_principal.result

key_vault_id = azurerm_key_vault.this.id

}

resource "azuread_application" "this" {

display_name = format("app-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

}

resource "azuread_service_principal" "this" {

application_id = azuread_application.this.application_id

app_role_assignment_required = false

}

resource "azuread_service_principal_password" "this" {

service_principal_id = azuread_service_principal.this.id

value = azurerm_key_vault_secret.service_principal.value

}

resource "azurerm_role_assignment" "this" {

scope = azurerm_storage_account.this.id

role_definition_name = "Storage Blob Data Contributor"

principal_id = azuread_service_principal.this.object_id

}

Above we are creating resources with the following properties:

random_password- A randomly generated password that will be assigned to our service principalazurerm_key_vault_secret- A secret that will be used to store the password for the service principalazuread_application- A new Azure Active Directory (AAD) application that will be used for the service principalazuread_service_principal- A new service principal that will be used for authentication on our Synapse instanceazuread_service_principal_password- The password that will be associated with the service principal, this is what we stored in the key vault earlierazurerm_role_assignment- A new role assignment that will be used to grant the service principal the ability to access and manage a storage account for Synapse

Synapse#

Now that we have our credentials all ready to go we can setup the Synapse instance, as well as any ancillary resources that we might need.

resource "azurerm_storage_account" "this" {

name = format("sa%s%s%s",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

account_tier = "Standard"

account_replication_type = "LRS"

account_kind = "BlobStorage"

}

resource "azurerm_storage_data_lake_gen2_filesystem" "this" {

name = format("fs%s%s%s",

local.naming.location[var.location], var.environment, var.project)

storage_account_id = azurerm_storage_account.this.id

}

resource "azurerm_key_vault_secret" "sql_administrator_login" {

name = "sql-administrator-login"

value = "sqladmin"

key_vault_id = azurerm_key_vault.this.id

}

resource "random_password" "sql_administrator_login" {

length = 16

special = false

}

resource "azurerm_key_vault_secret" "sql_administrator_login_password" {

name = "sql-administrator-login-password"

value = random_password.sql_administrator_login.result

key_vault_id = azurerm_key_vault.this.id

}

resource "azurerm_synapse_workspace" "this" {

name = format("ws-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

resource_group_name = azurerm_resource_group.this.name

location = azurerm_resource_group.this.location

storage_data_lake_gen2_filesystem_id = azurerm_storage_data_lake_gen2_filesystem.this.id

aad_admin = [

{

login = "AzureAD Admin"

object_id = azuread_service_principal.this.object_id

tenant_id = data.azurerm_client_config.current.tenant_id

}

]

sql_administrator_login = azurerm_key_vault_secret.sql_administrator_login.value

sql_administrator_login_password = azurerm_key_vault_secret.sql_administrator_login_password.value

}

resource "azurerm_synapse_sql_pool" "this" {

name = format("pool_%s",

var.project)

synapse_workspace_id = azurerm_synapse_workspace.this.id

sku_name = "DW100c"

create_mode = "Default"

}

resource "azurerm_synapse_firewall_rule" "allow_azure_services" {

name = "AllowAllWindowsAzureIps"

synapse_workspace_id = azurerm_synapse_workspace.this.id

start_ip_address = "0.0.0.0"

end_ip_address = "0.0.0.0"

}

As per usual we will go through each of the resources being created and explain what they do.

azurerm_storage_account- A new storage account that will be used as a storage and ingestion point for Synapseazurerm_storage_data_lake_gen2_filesystem- A container within our storage account that will actually house the data for Synapseazurerm_key_vault_secret- Secrets that will store the SQL administrator login and passwordrandom_password- A randomly generated password that will be assigned to our SQL administrator loginazurerm_synapse_workspace- The synapse workspace which gives engineers the ability to deal with their data requirementsazurerm_synapse_sql_pool- A new SQL pool that will be used to store Synapse dataazurerm_synapse_firewall_rule- A new firewall rule that will allow all traffic from Azure services

Databricks#

Finally from a resource creation perspective we need to setup the internals of the Databricks instance. This mostly entails creating a single node Databricks cluster where Notebooks etc can be created by Data Engineers.

data "databricks_node_type" "smallest" {

local_disk = true

depends_on = [

azurerm_databricks_workspace.this

]

}

data "databricks_spark_version" "latest_lts" {

long_term_support = true

depends_on = [

azurerm_databricks_workspace.this

]

}

resource "databricks_cluster" "this" {

cluster_name = format("dbsc-%s-%s-%s",

local.naming.location[var.location], var.environment, var.project)

spark_version = data.databricks_spark_version.latest_lts.id

node_type_id = data.databricks_node_type.smallest.id

autotermination_minutes = 20

spark_conf = {

"spark.databricks.cluster.profile" : "singleNode"

"spark.master" : "local[*]"

}

custom_tags = {

"ResourceClass" = "SingleNode"

}

}

Outputs#

The last thing we will need to write in Terraform will be our outputs.tf, this is the information

we want returned to us once the deployment of all the previous code is complete.

output "azure_details" {

sensitive = true

value = {

tenant_id = data.azurerm_client_config.current.tenant_id

client_id = azuread_application.this.application_id

client_secret = azurerm_key_vault_secret.service_principal.value

}

}

output "storage_account" {

sensitive = true

value = {

name = azurerm_storage_account.this.name

container_name = azurerm_storage_data_lake_gen2_filesystem.this.name

access_key = azurerm_storage_account.this.primary_access_key

}

}

output "synapse" {

sensitive = true

value = {

database = azurerm_synapse_sql_pool.this.name

host = azurerm_synapse_workspace.this.connectivity_endpoints

user = azurerm_synapse_workspace.this.sql_administrator_login

password = azurerm_synapse_workspace.this.sql_administrator_login_password

}

}

In this we are simply outputting information such as our Service Principal details, information about our storage account and Synapse instance and how to authenticate to them.

Deploying the environment#

Now that we have all the pieces ready for us to use we can deploy it. This assumes that the files are all in your local directory and that you have Terraform installed.

- Firstly we will need to initialize terraform and pull down all the providers

terraform init

- Plan the deployment

terraform plan -var="environment=dev" -var="project=meow"

- Apply the deployment

terraform apply -var="environment=dev" -var="project=meow"

Running an ETL in Databricks#

Now that we have our environment deployed we can run through the ETL tutorial from Microsoft I linked at the top of this page.

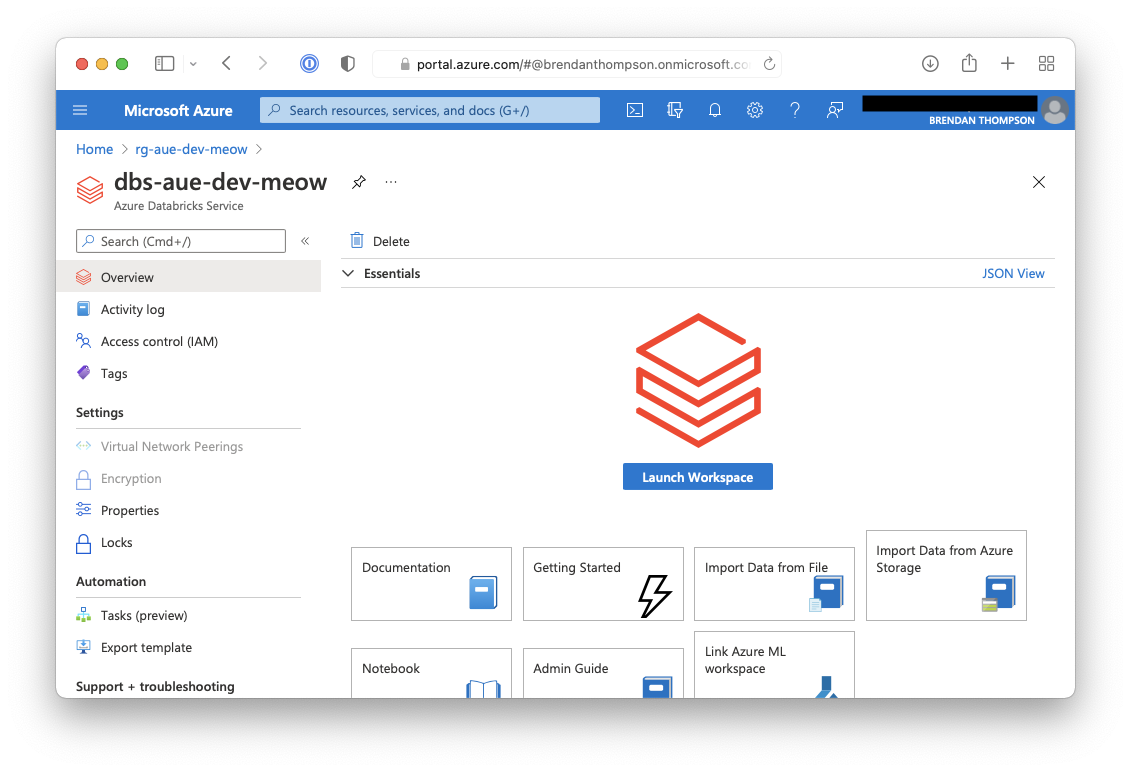

From the Azure portal within the Databricks resource click on Launch Workspace

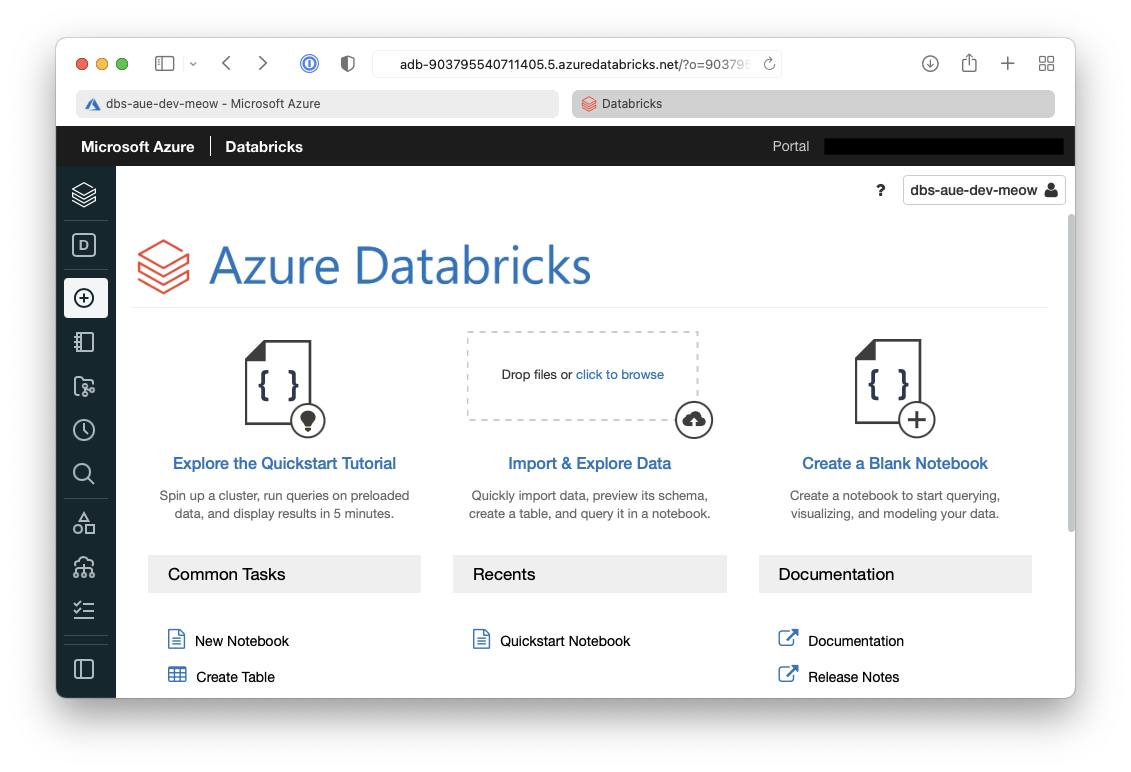

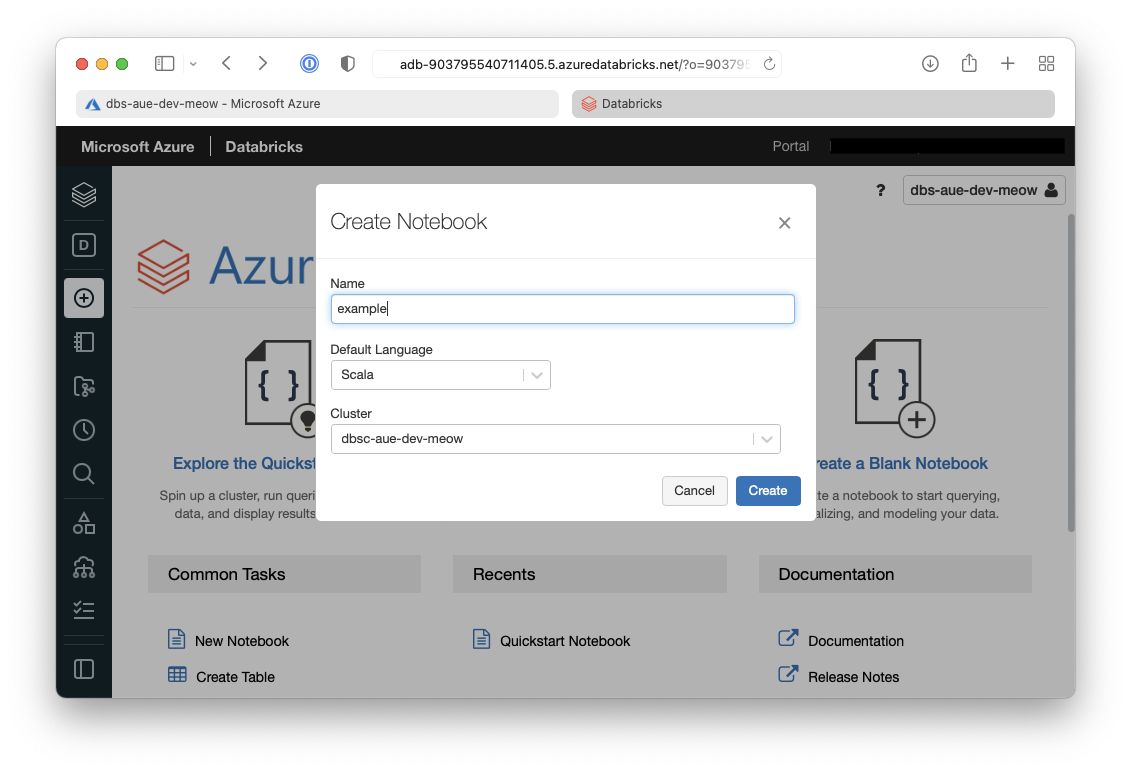

On the Databricks summary page click on New notebook

On the open dialogue give the notebook a name, select

Scalaand then select the cluster we just created

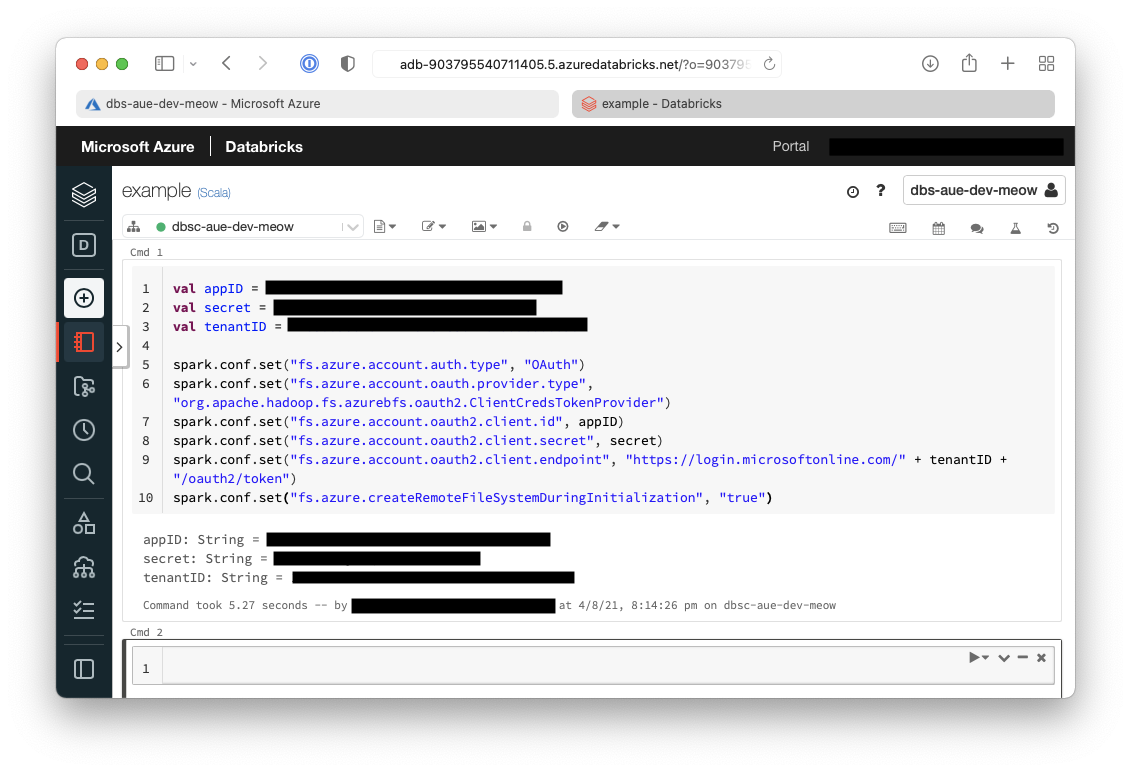

From within the notebook in the first Cell but in the following code which will setup the session configuration

val appID = "<appID>"

val secret = "<secret>"

val tenantID = "<tenant-id>"

spark.conf.set("fs.azure.account.auth.type", "OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set("fs.azure.account.oauth2.client.id", "<appID>")

spark.conf.set("fs.azure.account.oauth2.client.secret", "<secret>")

spark.conf.set("fs.azure.account.oauth2.client.endpoint", "https://login.microsoftonline.com/<tenant-id>/oauth2/token")

spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "true")

The result of the above command is below, and it shows the configuration has been written.

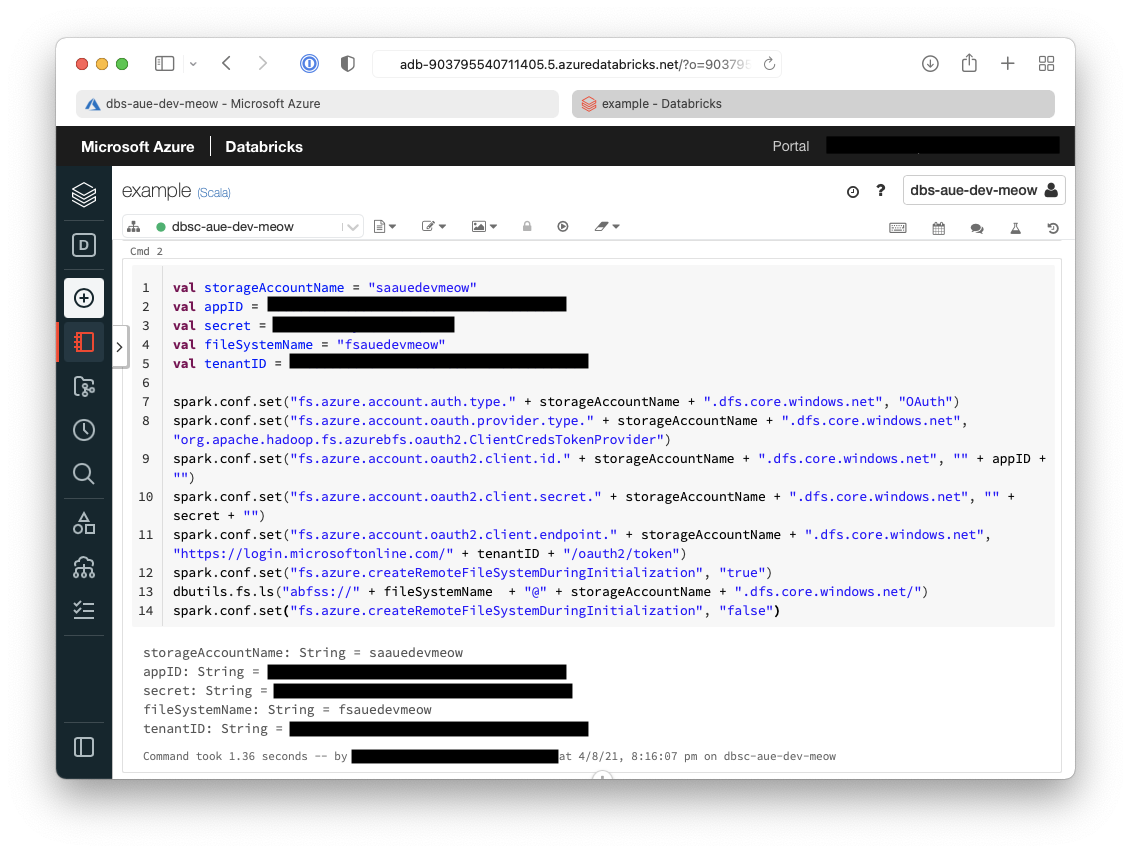

- In the next cell we will setup the account configuration to allow connectivity to our storage accounts

val storageAccountName = "<storage-account-name>"

val appID = "<app-id>"

val secret = "<secret>"

val fileSystemName = "<file-system-name>"

val tenantID = "<tenant-id>"

spark.conf.set("fs.azure.account.auth.type." + storageAccountName + ".dfs.core.windows.net", "OAuth")

spark.conf.set("fs.azure.account.oauth.provider.type." + storageAccountName + ".dfs.core.windows.net", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")

spark.conf.set("fs.azure.account.oauth2.client.id." + storageAccountName + ".dfs.core.windows.net", "" + appID + "")

spark.conf.set("fs.azure.account.oauth2.client.secret." + storageAccountName + ".dfs.core.windows.net", "" + secret + "")

spark.conf.set("fs.azure.account.oauth2.client.endpoint." + storageAccountName + ".dfs.core.windows.net", "https://login.microsoftonline.com/" + tenantID + "/oauth2/token")

spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "true")

dbutils.fs.ls("abfss://" + fileSystemName + "@" + storageAccountName + ".dfs.core.windows.net/")

spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "false")

We can see below that the account configuration has also been written.

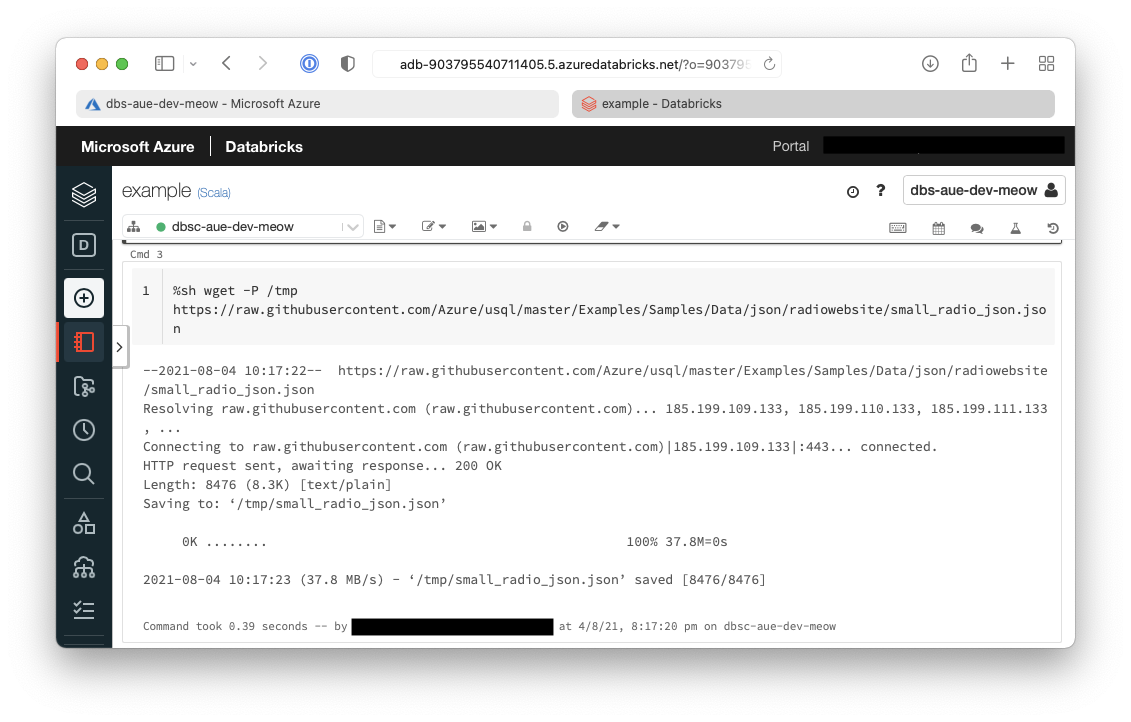

- Now the sample data needs to be downloaded onto the cluster

%sh wget -P /tmp https://raw.githubusercontent.com/Azure/usql/master/Examples/Samples/Data/json/radiowebsite/small_radio_json.json

It can be seen below that the file has been downloaded to temporary storage on the cluster.

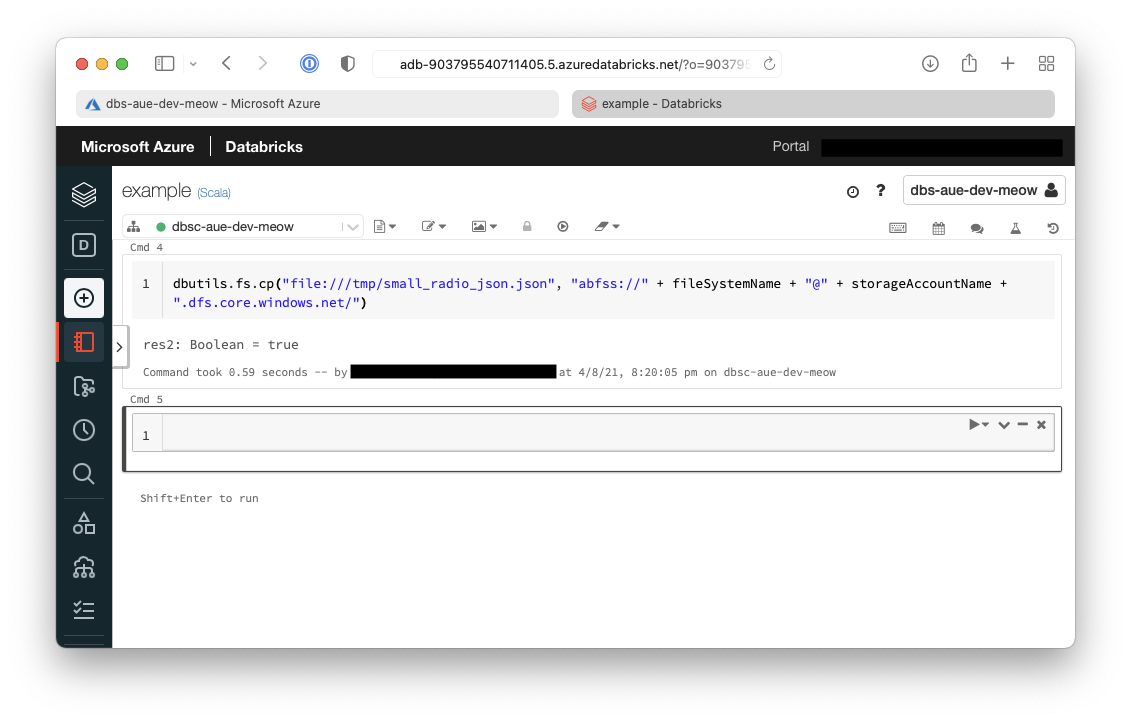

- Now we need to transfer from the cluster to the Azure Data Lake Storage (ADLS)

dbutils.fs.cp("file:///tmp/small_radio_json.json", "abfss://" + fileSystemName + "@" + storageAccountName + ".dfs.core.windows.net/")

The result of this command can be seen below as true.

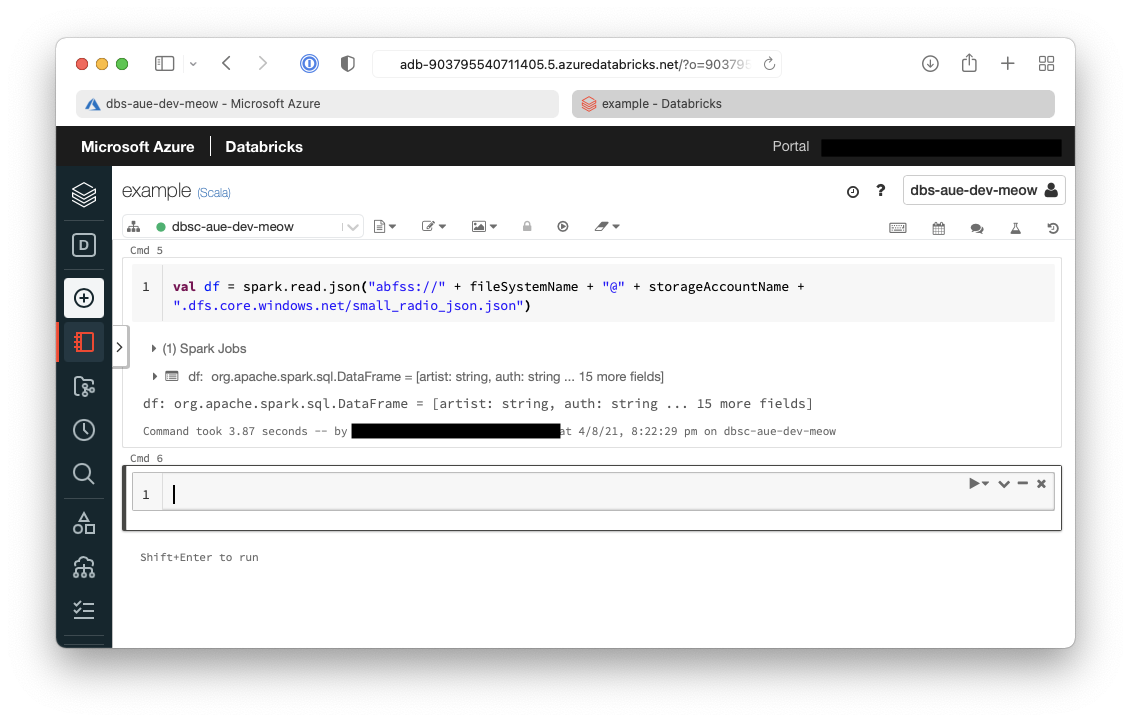

- Extract the data from ADLS into a dataframe

val df = spark.read.json("abfss://" + fileSystemName + "@" + storageAccountName + ".dfs.core.windows.net/small_radio_json.json")

From the result of the above command we can see that the data is now in a dataframe.

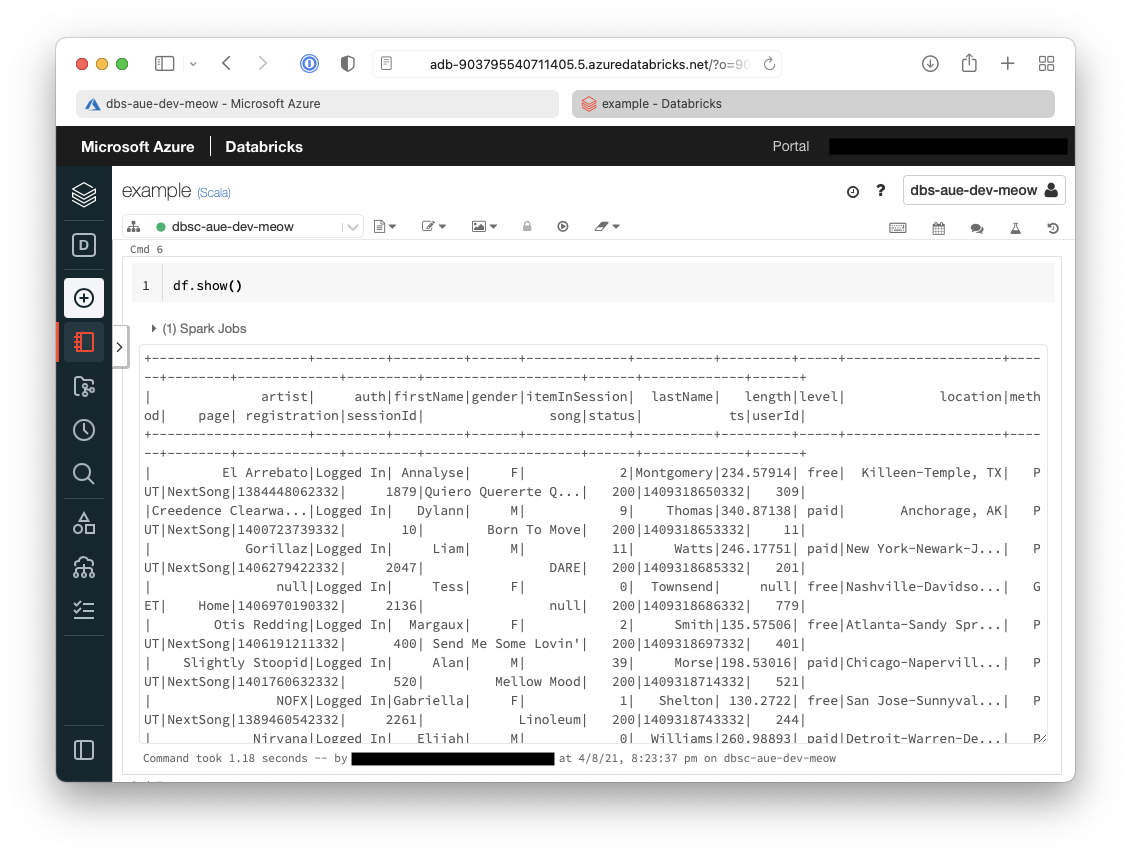

- Show the contents of the dataframe

df.show()

Below it can be seen that the dataframe contains the data from the sample file.

display(df)

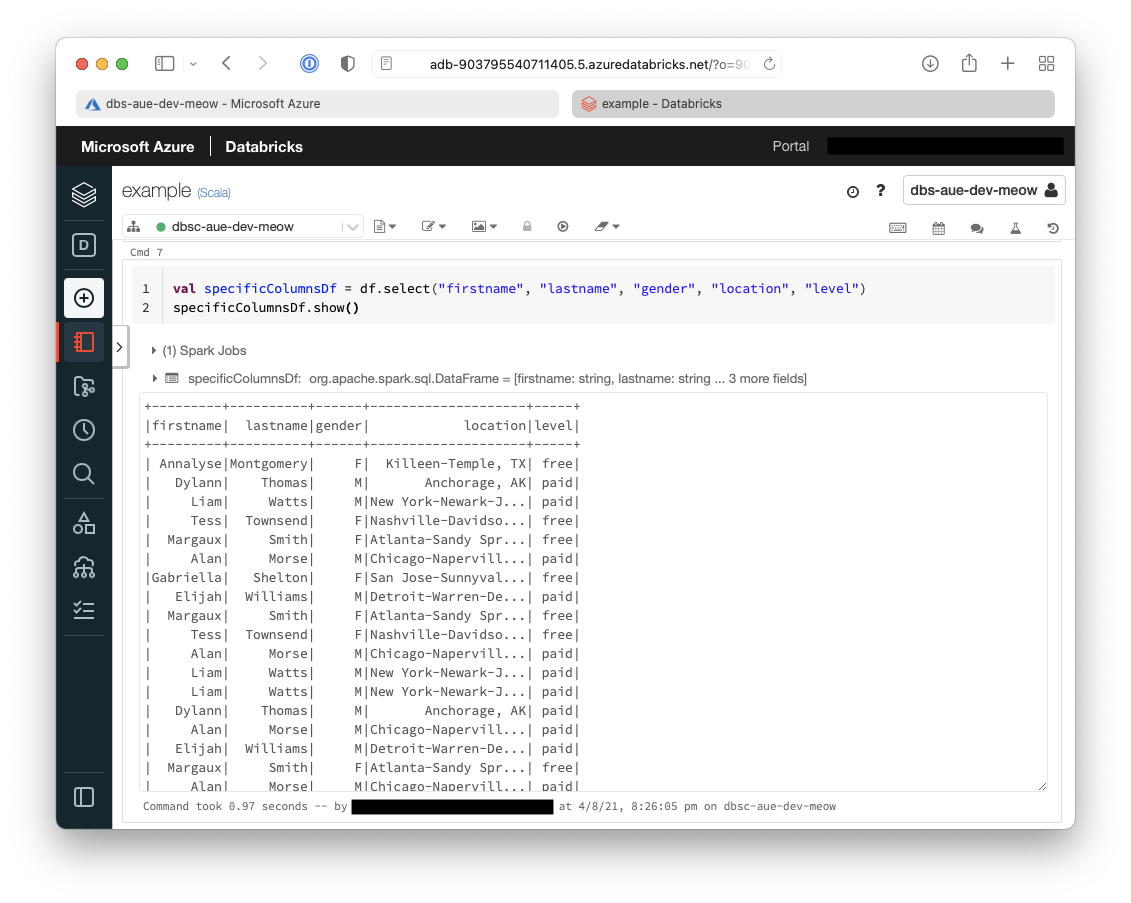

- The first basic transformation we will do is to only retrieve specific columns from the originally extracted dataframe

val specificColumnsDf = df.select("firstname", "lastname", "gender", "location", "level")

specificColumnsDf.show()

You can see below that the only columns that are now returned in the dataframe are:

firstnamelastnamegenderlocationlevel

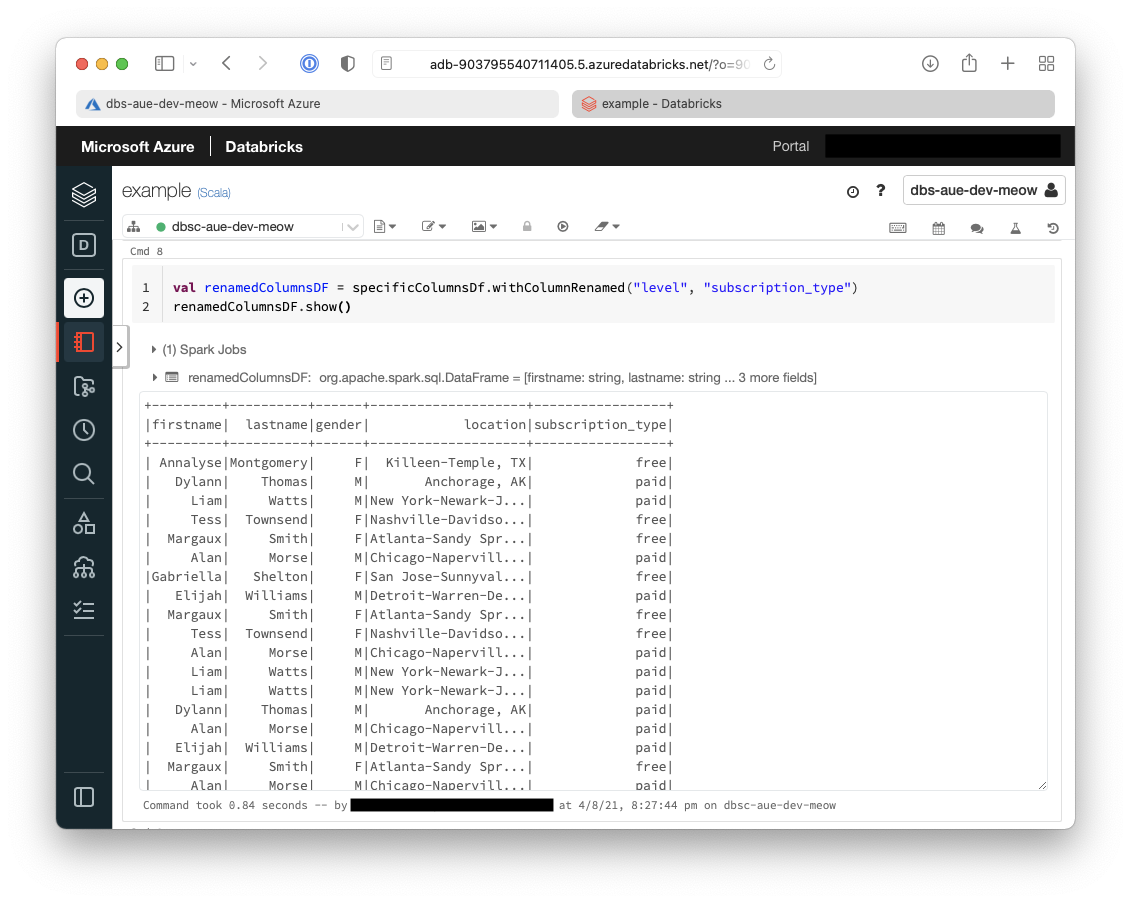

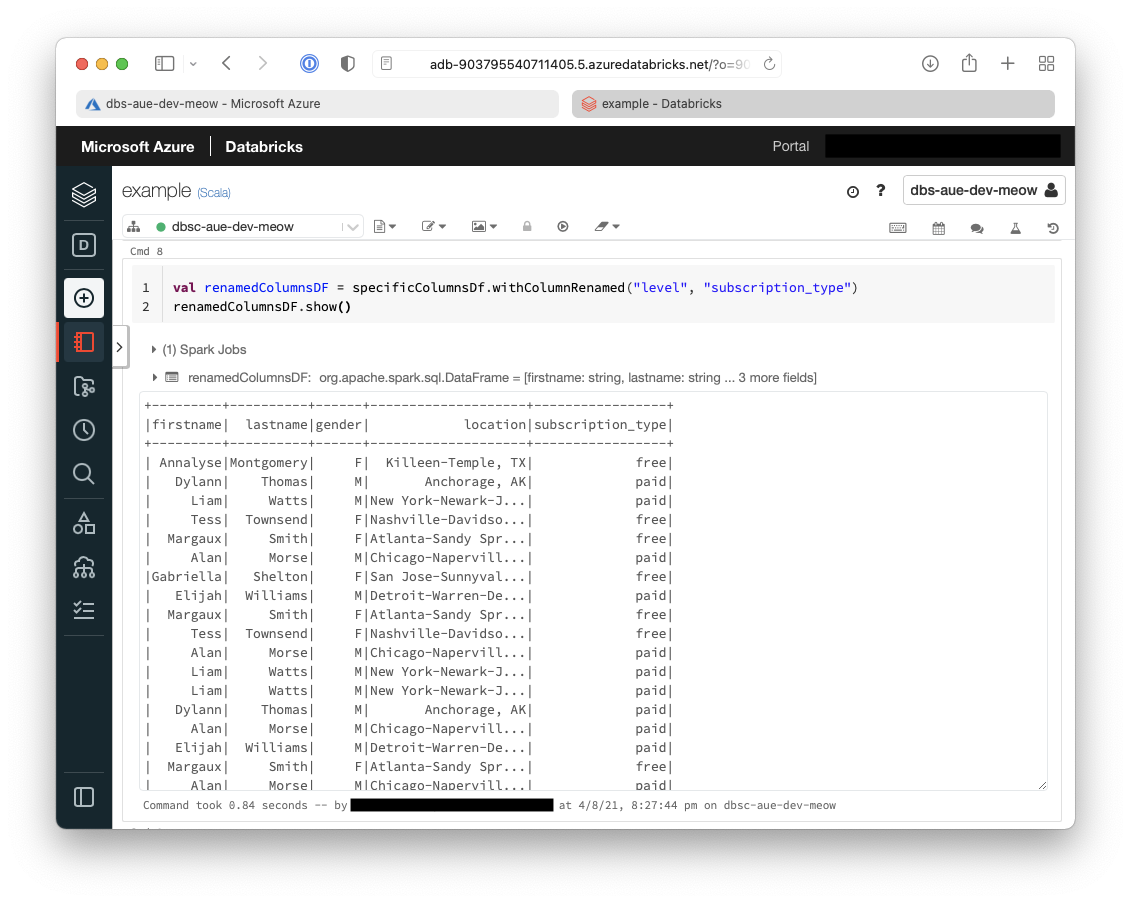

- The second transformation that we will do is to rename some columns

val renamedColumnsDF = specificColumnsDf.withColumnRenamed("level", "subscription_type")

renamedColumnsDF.show()

Below shows that the second transformation we have performed is to rename the column level to

subscription_type.

- Now we are going to load that transformed dataframe into Azure Synapse for later use

// Storage Account

val blobStorage = "<blob-storage-account-name>.blob.core.windows.net"

val blobContainer = "<blob-container-name>"

val blobAccessKey = "<access-key>"

val tempDir = "wasbs://" + blobContainer + "@" + blobStorage +"/tempDirs"

val acntInfo = "fs.azure.account.key."+ blobStorage

sc.hadoopConfiguration.set(acntInfo, blobAccessKey)

// Azure Synapse

val dwDatabase = "<database-name>"

val dwServer = "<database-server-name>"

val dwUser = "<user-name>"

val dwPass = "<password>"

val dwJdbcPort = "1433"

val dwJdbcExtraOptions = "encrypt=true;trustServerCertificate=true;hostNameInCertificate=*.database.windows.net;loginTimeout=30;"

val sqlDwUrl = "jdbc:sqlserver://" + dwServer + ":" + dwJdbcPort + ";database=" + dwDatabase + ";user=" + dwUser+";password=" + dwPass + ";$dwJdbcExtraOptions"

val sqlDwUrlSmall = "jdbc:sqlserver://" + dwServer + ":" + dwJdbcPort + ";database=" + dwDatabase + ";user=" + dwUser+";password=" + dwPass

spark.conf.set(

"spark.sql.parquet.writeLegacyFormat",

"true")

renamedColumnsDF.write.format("com.databricks.spark.sqldw")

.option("url", sqlDwUrlSmall)

.option("dbtable", "SampleTable")

.option( "forward_spark_azure_storage_credentials","True")

.option("tempdir", tempDir)

.mode("overwrite")

.save()

This last screenshot shows that the data has been pushed over to our Azure Synapse instance.