Getting started with Terratest on Azure

Brendan Thompson • 17 September 2021 • 19 min read

In my years of working with Terraform, one thing I find is always forgotten when writing a module is testing! I feel like this is a problem in a lot of development scenarios, and it is especially prevalent when the developer comes from an infrastructure or cloud engineering background. After many conversations with colleagues it has become clear to me that there are not many good guides on how to get started with testing Terraform code, even more so on Azure.

This blog post aims to show you how to get started testing your Terraform code with terratest on the Azure cloud. When I started thinking about what module I would use to show this I opted for Databricks, as I had recently written a post around Databricks (which you can read here) from a development perspective. In hindsight this was a foolish decision as deploying Databricks on Azure takes a bit of time!

I won't focus too much on the Terraform code itself here as this was covered off by my Databricks on Azure blog post. We will go through the following aspects:

- Locating and using the Terraform module for Databricks

- Creating the fixture

- Writing Terraform tests using terratest:

  - Unit tests

  - Integration tests

- Executing the tests

- Creating a pipeline to run the tests using GitHub Actions

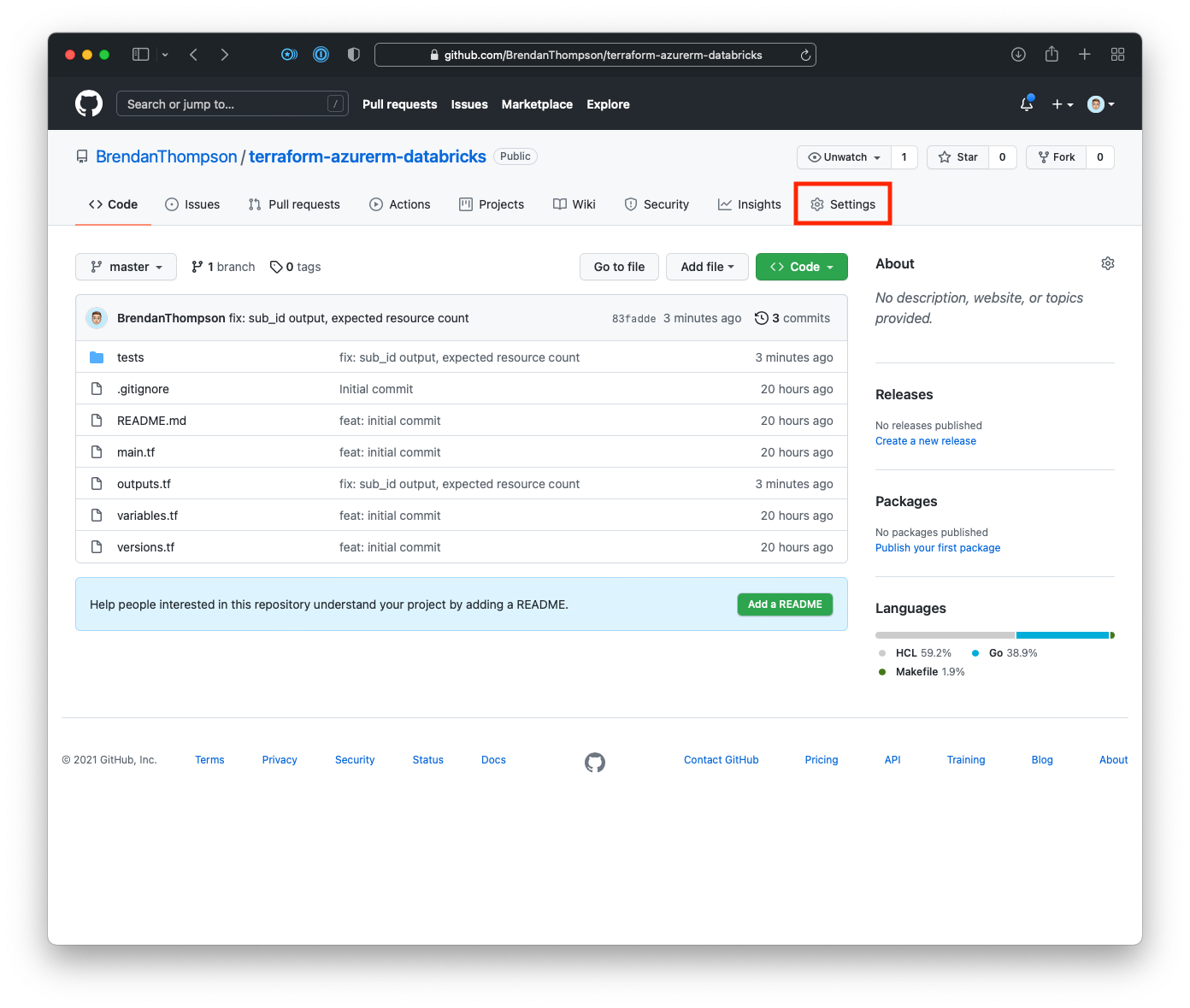

Below is a link to the GitHub repository for the Terraform module and the tests that we will write in this post.

http://github.com/BrendanThompson/terraform-azurerm-databricks

Environment Setup

First we need to ensure that we have Go installed on our machine, as well as Terraform.

Creating the fixtures

The fixtures are calling the Terraform module we have created, and adding in any additional resources that we might require.

Below is the main.tf which contains the module call with a path-based source, as well as the declaration for the azurerm provider.

provider "azurerm" {
  features {}
}

module "databricks" {
  source = "../../"
  suffix = "aue-dev-blt"
}

Writing the tests

In this section we will go through the two types of testing we are going to implement for our module: unit testing and integration testing. Before we delve into that detail, however, we will set up a Makefile to help us run tests locally more easily.

This Makefile is very simple: we declare a variable to override the default timeout of our go tests and then set up three commands for us to run:

- unit-test - runs the unit tests based on the unit build constraints
- integration-test - runs the integration tests based on the integration build constraints
- test - runs both unit and integration tests

timeout = 60m

unit: unit-test
unit-test:
	go test -timeout $(timeout) -tags=unit -v

integration: integration-test
integration-test:
	go test -timeout $(timeout) -tags=integration -v

test: unit-test integration-test

Before we create any test files we need to set ourselves up a go module. This can be done with the following command:

go mod init tests

This creates a go.mod file for us. A go.sum file is added alongside it once the dependencies are resolved (for example by running go mod tidy after the tests have been written). These files are required in order for us to build and run go code outside of the GOPATH.

We override the default go test timeout (10 minutes) because some Azure resources can take in excess of 45 minutes to create, and that does not include the running of the test itself, nor the destruction of the resources. In the example I have set this timeout to 60 minutes as this will ensure we have enough time to create, test, and destroy the resources in our Databricks module.

Unit Testing

Terraform does not really have a concept of unit testing. However, in my opinion, a terratest that uses terraform plan rather than terraform apply can be considered a unit test. A plan does not actually create any resources, which means that we can validate simple things before the resources themselves are created.

For this I have created a file called terraform_databricks_unit_test.go. I will go through each part of this file and explain what it is doing.

The first line in the file is where we declare our build constraint, letting go know that this file specifically is a unit test.

//go:build unit

The next portion we have is our package declaration and import statement. For the package name I have opted for the rather self-explanatory name of test as that is what the package is doing.

The unit tests use the following packages:

- regexp - this allows us to build and use regular expressions within our tests
- strconv - this allows us to convert strings to integers, and vice versa
- testing - this allows us to declare and run test cases
- github.com/stretchr/testify/assert - this allows us to assert that our test criteria evaluate to the expected result
- github.com/gruntwork-io/terratest/modules/terraform - this package allows us to access and execute the Terraform commands

package test

import (
	"regexp"
	"strconv"
	"testing"

	"github.com/gruntwork-io/terratest/modules/terraform"
	"github.com/stretchr/testify/assert"
)

We are only going to declare a single const, which is used to tell Terraform where the fixtures we created earlier are.

const (
	fixtures = "./fixtures"
)

Now we are onto the meatier part of our unit test, the actual test itself. In this particular instance we are looking to ensure that the number of resources that are to be created/changed/destroyed are as expected.

First off, we need to set up some Terraform options that the terratest Terraform module will use to run the fixture code. In this case we are telling Terraform to use the fixtures directory as the root directory for the command, and that we do not want the results to contain colour.

The next step is to run the Terraform plan itself; this is the equivalent of running the terraform plan command ourselves against the fixture code. Once we have a plan we ensure that it is not empty before we attempt any further validation. If the plan is empty the test fails.

Every Terraform plan has a line, right at the end, that gives the operator an overview of what is happening. We are going to construct a regular expression that matches that line and use the result to measure success. As you can see we are using table-driven tests to validate each component of the plan summary.

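func TestAzureResources(t *testing.T) {
	opts := &terraform.Options{
		TerraformDir: fixtures,
		NoColor:      true,
	}

	plan := terraform.InitAndPlan(t, opts)
	assert.NotEmpty(t, plan)

	// Match the summary line, e.g. "Plan: 13 to add, 0 to change, 0 to destroy."
	re := regexp.MustCompile(`Plan: (\d+) to add, (\d+) to change, (\d+) to destroy\.`)
	planResult := re.FindStringSubmatch(plan)
	// Stop here if the summary line is missing, rather than panic on the indexes below.
	if len(planResult) != 4 {
		t.Fatalf("plan summary line not found in plan output")
	}

	testCases := []struct {
		name string
		got  string
		want int
	}{
		{"created", planResult[1], 13},
		{"changed", planResult[2], 0},
		{"destroyed", planResult[3], 0},
	}

	for _, tc := range testCases {
		t.Run(tc.name, func(t *testing.T) {
			got, err := strconv.Atoi(tc.got)
			if err != nil {
				t.Fatalf("unable to convert %q to int: %v", tc.got, err)
			}
			// testify expects the expected value first, then the actual value.
			assert.Equal(t, tc.want, got)
		})
	}
}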

Based on the fixture that we wrote, in this instance we expect 13 resources to be created, and 0 changed or destroyed. This test helps us validate that fact before we even attempt to deploy any resources to Azure.
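The summary-line matching described above can also be pulled out into a small helper that is easy to exercise without running Terraform at all. The following is only a sketch using the standard library: ParsePlanSummary and PlanCounts are hypothetical names of my own and are not part of terratest, and the sample plan text is made up for illustration.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// planSummary matches the summary line printed at the end of a plan, e.g.
// "Plan: 13 to add, 0 to change, 0 to destroy."
var planSummary = regexp.MustCompile(`Plan: (\d+) to add, (\d+) to change, (\d+) to destroy\.`)

// PlanCounts holds the three numbers from the summary line.
// Hypothetical type, used only for this illustration.
type PlanCounts struct {
	Add, Change, Destroy int
}

// ParsePlanSummary is a hypothetical helper (not a terratest function).
// It returns an error instead of panicking when the summary line is missing.
func ParsePlanSummary(plan string) (PlanCounts, error) {
	m := planSummary.FindStringSubmatch(plan)
	if m == nil {
		return PlanCounts{}, fmt.Errorf("no plan summary line found")
	}
	nums := make([]int, 3)
	for i := range nums {
		n, err := strconv.Atoi(m[i+1])
		if err != nil {
			return PlanCounts{}, fmt.Errorf("parsing %q: %w", m[i+1], err)
		}
		nums[i] = n
	}
	return PlanCounts{Add: nums[0], Change: nums[1], Destroy: nums[2]}, nil
}

func main() {
	// Made-up plan output; a real plan contains much more text above this line.
	counts, err := ParsePlanSummary("...\nPlan: 13 to add, 0 to change, 0 to destroy.\n")
	fmt.Println(counts, err)
}
```

Returning an error for a missing summary line means the calling test can fail with a clear message rather than an index-out-of-range panic.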

Note that terraform.InitAndPlan returns the plan output as a string, rather than a struct/json representation of it that we can use to interact with it in a more meaningful way. I am hoping that in time HashiCorp will change this and allow for better use of the Plan.

Integration Testing

As with our unit tests we are going to demarcate our integration testing go file, terraform_databricks_integration_test.go, with a build constraint to ensure that we are able to run just the integration tests if we so wish.

//go:build integration

For our imports we no longer require regexp or strconv. We will, however, add another package from terratest so that we are able to interact with Azure resources directly: github.com/gruntwork-io/terratest/modules/azure.

package test

import (
	"testing"

	"github.com/gruntwork-io/terratest/modules/azure"
	"github.com/gruntwork-io/terratest/modules/terraform"
	"github.com/stretchr/testify/assert"
)

As with our unit tests we only need a single const to tell Terraform where the fixtures we created earlier are.

const (
	fixtures = "./fixtures"
)

For the integration tests I thought it would be useful to try and generalise the testing function itself. To do this we need a way of telling each test case what condition it needs to check; for this I have created a type called TestCondition, which has two potential values.

The first, TestConditionEquals, we will use later to tell our code that we want to check equality on the case. The second, TestConditionNotEmpty, tells it that we want to ensure the returned value is not empty.

type TestCondition int

const (
	TestConditionEquals   TestCondition = 0
	TestConditionNotEmpty TestCondition = 1
)

Instead of just declaring the Terraform options in-line like we did previously we are going to use a function that returns them to us. Another option would have been to use a global variable; however, I personally find it cleaner to use a function, as it also means that if we wished we could override any of the options simply by passing in a value.

func IntegrationTestOptions() *terraform.Options {
	return &terraform.Options{
		TerraformDir: fixtures,
		NoColor:      true,
	}
}

This is our main entrypoint into the tests. As I want to run a few tests here on different parts of the infrastructure that we are deploying, I have split them out into separate functions for ease of reading as well as execution. The TestDatabricks function initialises our Terraform code and deploys the resources. Once all the tests are complete it also destroys the environment for us.

func TestDatabricks(t *testing.T) {
	// Register the destroy before applying, so that it still runs if the
	// apply fails part way through and leaves resources behind.
	defer terraform.Destroy(t, IntegrationTestOptions())
	terraform.InitAndApply(t, IntegrationTestOptions())

	t.Run("Output Validation", OutputValidation)
	t.Run("Key Vault Validation", KeyVaultValidation)
	t.Run("Networks", Networks)
}
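The position of the defer relative to the apply matters in a function like TestDatabricks. When a step fails through t.Fatal, the testing package stops the test goroutine with runtime.Goexit, which runs only the deferred calls that have already been registered. The sketch below imitates that behaviour with the standard library alone; failApply and simulate are hypothetical stand-ins of my own, not terratest functions.

```go
package main

import (
	"fmt"
	"runtime"
)

// failApply stands in for a failing apply step. runtime.Goexit is what
// t.FailNow (and therefore t.Fatal) uses to stop a test goroutine.
func failApply() {
	runtime.Goexit()
}

// simulate runs a fake test body in its own goroutine and reports whether
// the cleanup ran. deferFirst controls whether the cleanup is registered
// before or after the failing step.
func simulate(deferFirst bool) bool {
	cleaned := false
	done := make(chan struct{})
	go func() {
		defer close(done)
		cleanup := func() { cleaned = true }
		if deferFirst {
			defer cleanup()
		}
		failApply()
		if !deferFirst {
			defer cleanup() // never reached: failApply does not return
		}
	}()
	<-done
	return cleaned
}

func main() {
	fmt.Println("defer before apply, cleanup ran:", simulate(true))
	fmt.Println("defer after apply, cleanup ran:", simulate(false))
}
```

In terms of the integration test, this suggests that registering the destroy before the apply is the safer order, since a failed apply can still leave partially created resources behind.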

The first set of tests we want to run are simple validation tests to ensure that the values of our Terraform outputs are what we expect them to be. For instance, we want to ensure that the virtual network and subnet ranges are the same as what was passed into the code; we also want to ensure that the Databricks workspace URL is not empty. (If we were deploying a private workspace here we might want to validate that the workspace URL is not a public endpoint.)

Again we are utilising table-driven tests for each of the test cases. We have created ourselves an anonymous struct with the following fields:

- Name - The name of the test case
- Got - The value we got back from our Terraform outputs
- Want - The value we expect to get
- Condition - The condition we are checking against (e.g. TestConditionEquals)

Using a struct like this with table-driven tests means that we do not have to repeat the testing logic; we can simply use a switch statement in conjunction with the TestCondition to validate our results in different ways. Another option to consider would be to have the Got field be of type func() interface{} and the Want field be interface{}. This would allow us to customise the logic a lot more, whilst keeping the test case itself simple and lean.

func OutputValidation(t *testing.T) {
	testCases := []struct {
		Name      string
		Got       string
		Want      string
		Condition TestCondition
	}{
		{"Virtual Network Range", terraform.Output(t, IntegrationTestOptions(), "virtual_network"), "10.0.0.0/23", TestConditionEquals},
		{"Private Subnet Range", terraform.Output(t, IntegrationTestOptions(), "private_subnet"), "10.0.0.0/24", TestConditionEquals},
		{"Public Subnet Range", terraform.Output(t, IntegrationTestOptions(), "public_subnet"), "10.0.1.0/24", TestConditionEquals},
		{"Workspace URL", terraform.Output(t, IntegrationTestOptions(), "databricks_workspace_url"), "", TestConditionNotEmpty},
	}

	for _, tc := range testCases {
		t.Run(tc.Name, func(t *testing.T) {
			switch tc.Condition {
			case TestConditionEquals:
				// testify expects the expected value first, then the actual value.
				assert.Equal(t, tc.Want, tc.Got)
			case TestConditionNotEmpty:
				assert.NotEmpty(t, tc.Got)
			}
		})
	}
}
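To make the func() interface{} alternative mentioned earlier a little more concrete, here is a rough standard-library sketch of what such a table could look like. The testCase type, the check function, and the fakeOutputs map are hypothetical names of my own; fakeOutputs stands in for the terraform.Output lookups and its values are made up. The TestCondition constants use iota here, which yields the same 0 and 1 values.

```go
package main

import (
	"fmt"
	"reflect"
)

type TestCondition int

const (
	TestConditionEquals TestCondition = iota
	TestConditionNotEmpty
)

// testCase mirrors the anonymous struct, but Got is a function so the
// value is only fetched when the case actually runs.
type testCase struct {
	Name      string
	Got       func() interface{}
	Want      interface{}
	Condition TestCondition
}

// check reports whether a case passes. It is a hypothetical stand-in for
// the assert calls and treats a nil or zero value as "empty".
func check(tc testCase) bool {
	got := tc.Got()
	switch tc.Condition {
	case TestConditionEquals:
		return reflect.DeepEqual(got, tc.Want)
	case TestConditionNotEmpty:
		return got != nil && !reflect.ValueOf(got).IsZero()
	default:
		return false
	}
}

func main() {
	// Made-up values standing in for real Terraform outputs.
	fakeOutputs := map[string]string{
		"virtual_network":          "10.0.0.0/23",
		"databricks_workspace_url": "https://example.invalid",
	}
	cases := []testCase{
		{"Virtual Network Range", func() interface{} { return fakeOutputs["virtual_network"] }, "10.0.0.0/23", TestConditionEquals},
		{"Workspace URL", func() interface{} { return fakeOutputs["databricks_workspace_url"] }, nil, TestConditionNotEmpty},
	}
	for _, tc := range cases {
		fmt.Printf("%s: %v\n", tc.Name, check(tc))
	}
}
```

Because Got is a function, each case could do more than read an output, for example trimming or parsing a value before it is compared.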

Now that we have validated that the outputs are all as expected we can move on to checking some more important things in the environment, such as that the Key Vault exists and the customer managed key we want to use for our Databricks workspace is present.

We do this by making a call to the GetKeyVault() function from the terratest module for Azure. This function returns an instance of the Key Vault based on some attributes we pass in. Then we use the KeyVaultKeyExists() function to ensure that our customer-managed key is present in the Key Vault.

func KeyVaultValidation(t *testing.T) {
	name := terraform.Output(t, IntegrationTestOptions(), "key_vault_name")
	subscriptionID := terraform.Output(t, IntegrationTestOptions(), "subscription_id")
	resourceGroup := terraform.Output(t, IntegrationTestOptions(), "resource_group_name")
	keyVault := azure.GetKeyVault(t, resourceGroup, name, subscriptionID)

	t.Log(name)
	t.Log(subscriptionID)
	t.Log(resourceGroup)
	t.Log(keyVault)

	t.Run("Key Vault Exists", func(t *testing.T) {
		// Expected value first, then the actual value.
		assert.Equal(t, name, *keyVault.Name)
	})

	t.Run("Key Vault Secret Exists", func(t *testing.T) {
		// Despite the sub-test name, this checks for the customer-managed key "cmk".
		assert.True(t, azure.KeyVaultKeyExists(t, name, "cmk"))
	})
}

The final set of tests that I want to do for this module ensures that the subnet delegations on the subnets associated with our Databricks workspace are present. Again this is pretty simple, as terratest has a GetSubnetE() function that we can use to retrieve this information. It returns an instance of the Subnet from the Azure REST API, which we can then use to drill down into the details of the subnet's delegations and ensure that they meet the requirement of Microsoft.Databricks/workspaces.

We could do some more advanced validation here too, such as ensuring that the NSGs have relevant rules or that the Actions on the subnet delegations are correct.

func Networks(t *testing.T) {
	virtualNetworkName := terraform.Output(t, IntegrationTestOptions(), "virtual_network_name")
	privateSubnetName := terraform.Output(t, IntegrationTestOptions(), "private_subnet_name")
	publicSubnetName := terraform.Output(t, IntegrationTestOptions(), "public_subnet_name")
	resourceGroupName := terraform.Output(t, IntegrationTestOptions(), "resource_group_name")
	subscriptionID := terraform.Output(t, IntegrationTestOptions(), "subscription_id")

	t.Run("Private Subnet Delegations", func(t *testing.T) {
		privateSubnet, err := azure.GetSubnetE(privateSubnetName, virtualNetworkName, resourceGroupName, subscriptionID)
		if err != nil {
			t.Fatal(err)
		}

		// Every delegation on the subnet must be for Databricks workspaces.
		for _, p := range *privateSubnet.SubnetPropertiesFormat.Delegations {
			assert.Equal(t, "Microsoft.Databricks/workspaces", *p.ServiceDelegationPropertiesFormat.ServiceName)
		}
	})

	t.Run("Public Subnet Delegations", func(t *testing.T) {
		publicSubnet, err := azure.GetSubnetE(publicSubnetName, virtualNetworkName, resourceGroupName, subscriptionID)
		if err != nil {
			t.Fatal(err)
		}

		t.Log(*publicSubnet.Name)

		for _, p := range *publicSubnet.SubnetPropertiesFormat.Delegations {
			assert.Equal(t, "Microsoft.Databricks/workspaces", *p.ServiceDelegationPropertiesFormat.ServiceName)
		}
	})
}
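One thing worth keeping in mind with a loop-based check like the one in Networks is that it passes when a subnet has no delegations at all, because the loop body never runs. A small helper can make the "at least one, and all matching" rule explicit. This is only a sketch: allDelegatedTo is a hypothetical function of my own that works on plain strings, so the service names would first need to be collected from the subnet returned by the Azure API.

```go
package main

import "fmt"

const databricksDelegation = "Microsoft.Databricks/workspaces"

// allDelegatedTo reports whether names is non-empty and every entry
// equals want. Hypothetical helper, not part of terratest.
func allDelegatedTo(names []string, want string) bool {
	if len(names) == 0 {
		return false // no delegations at all should not count as a pass
	}
	for _, n := range names {
		if n != want {
			return false
		}
	}
	return true
}

func main() {
	fmt.Println(allDelegatedTo([]string{databricksDelegation}, databricksDelegation))
	fmt.Println(allDelegatedTo(nil, databricksDelegation))
}
```

In a test, the result could be passed straight to assert.True so that a subnet without delegations fails the check instead of silently passing.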

Local Test Execution

Executing these tests locally is pretty easy, and thankfully we wrote the Makefile earlier, which means that the actual task of executing them couldn't be simpler.

As we will be interacting with both the azurerm provider and the Azure APIs, we need to authenticate with Azure before we can run these tests. You could do this in one of several ways, including by authenticating with the Azure CLI. However, I will be using a Service Principal, so I will plumb my authentication details in via environment variables. Unfortunately, because Microsoft is a big fan of consistency, we actually need to declare two sets of environment variables with the same values. Instead of repeating them I am sourcing one from the other.

# Terraform Provider
export ARM_TENANT_ID="00000000-0000-0000-0000-000000000000"
export ARM_CLIENT_ID="00000000-0000-0000-0000-000000000000"
export ARM_CLIENT_SECRET="00000000-0000-0000-0000-000000000000"
export ARM_SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"

# Golang Azure SDK
export AZURE_TENANT_ID=$ARM_TENANT_ID
export AZURE_CLIENT_ID=$ARM_CLIENT_ID
export AZURE_CLIENT_SECRET=$ARM_CLIENT_SECRET
export AZURE_SUBSCRIPTION_ID=$ARM_SUBSCRIPTION_ID

First off we want to kick off our unit tests, and we do that with:

make unit-test

If these tests run and pass you will see this at the end of the test:

=== RUN TestAzureResources/created
=== RUN TestAzureResources/changed
=== RUN TestAzureResources/destroyed
--- PASS: TestAzureResources (13.42s)
    --- PASS: TestAzureResources/created (0.00s)
    --- PASS: TestAzureResources/changed (0.00s)
    --- PASS: TestAzureResources/destroyed (0.00s)
PASS
ok test 13.850s

Now we need to run the integration tests, and we do this with:

make integration-test

As these are going out and actually creating our resources in Azure it might take a hot-minute. Once the tests have finished running at the end of the log you will see the same test summary as per the above, this should look like the following if everything went well:

--- PASS: TestDatabricks (916.62s)
    --- PASS: TestDatabricks/Output_Validation (7.50s)
        --- PASS: TestDatabricks/Output_Validation/Virtual_Network_Range (0.00s)
        --- PASS: TestDatabricks/Output_Validation/Private_Subnet_Range (0.00s)
        --- PASS: TestDatabricks/Output_Validation/Public_Subnet_Range (0.00s)
        --- PASS: TestDatabricks/Output_Validation/Workspace_URL (0.00s)
    --- PASS: TestDatabricks/Key_Vault_Validation (91.14s)
        --- PASS: TestDatabricks/Key_Vault_Validation/Key_Vault_Exists (0.00s)
        --- PASS: TestDatabricks/Key_Vault_Validation/Key_Vault_Secret_Exists (0.55s)
    --- PASS: TestDatabricks/Networks (9.94s)
        --- PASS: TestDatabricks/Networks/Private_Subnet_Delegations (0.25s)
        --- PASS: TestDatabricks/Networks/Public_Subnet_Delegations (0.68s)
PASS
ok test 917.058s

And that is the basics of running terratest locally on your machine!

Automated Test Execution

Now we get to the really fun stuff, where we can get a CI/CD tool to run the tests for us! For this example I will be using GitHub Actions (which is always my CI/CD of choice), but you can use pretty much any tool you like!

For this I will take a procedural approach so that it is easy to follow.

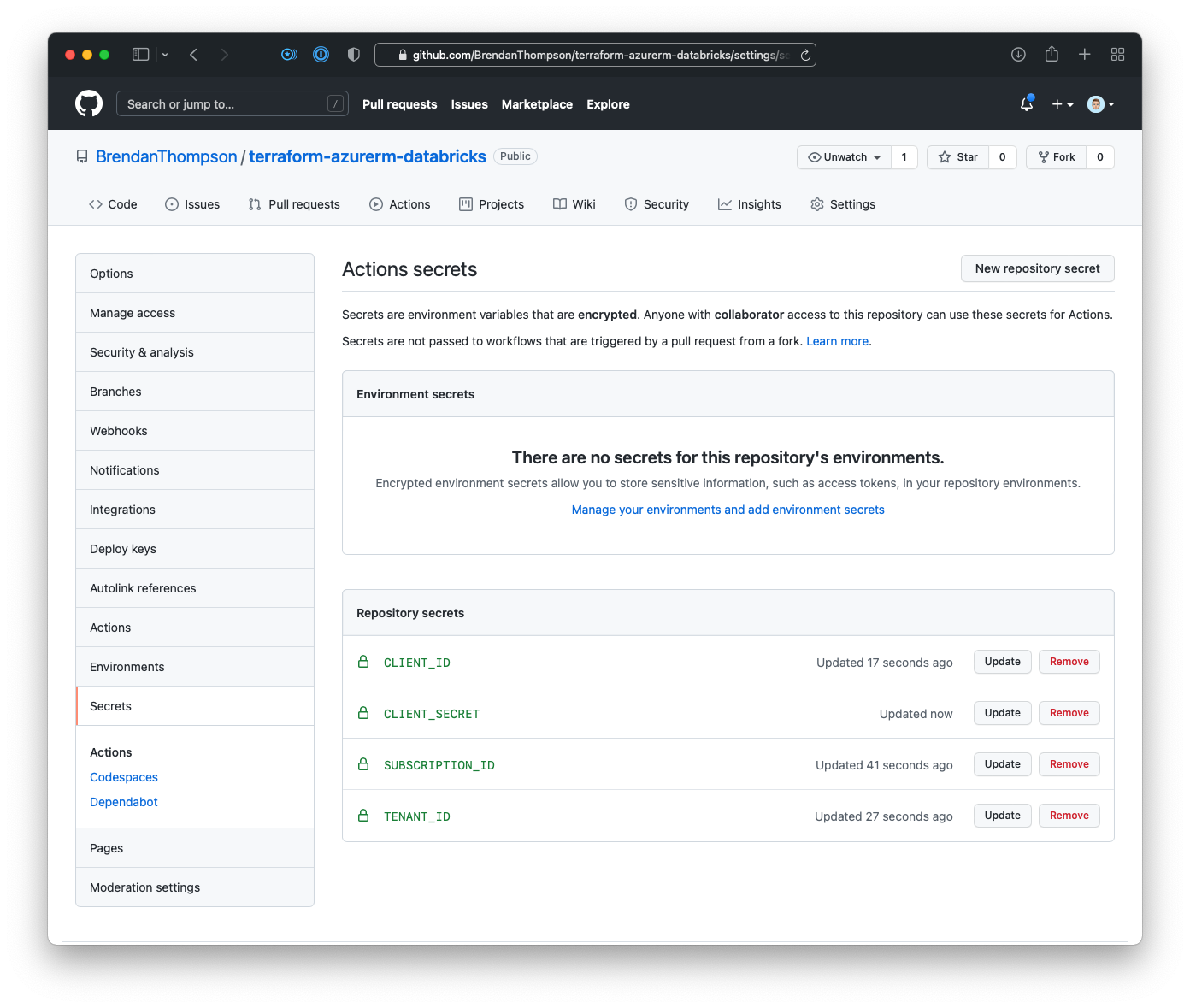

Go to your Terraform module's GitHub repository; from here we want to click on Settings

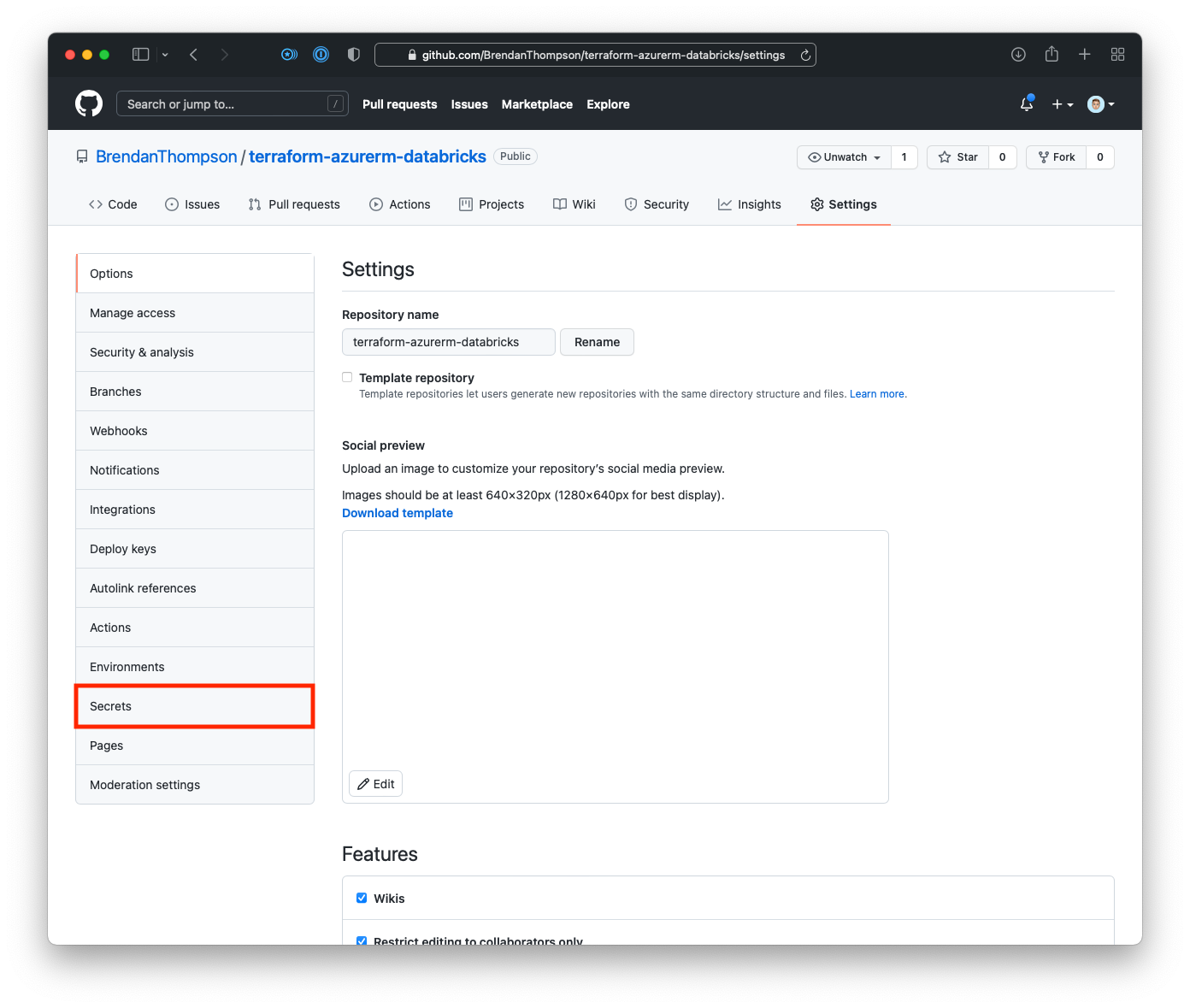

From here we want to click on Secrets in the left hand menu

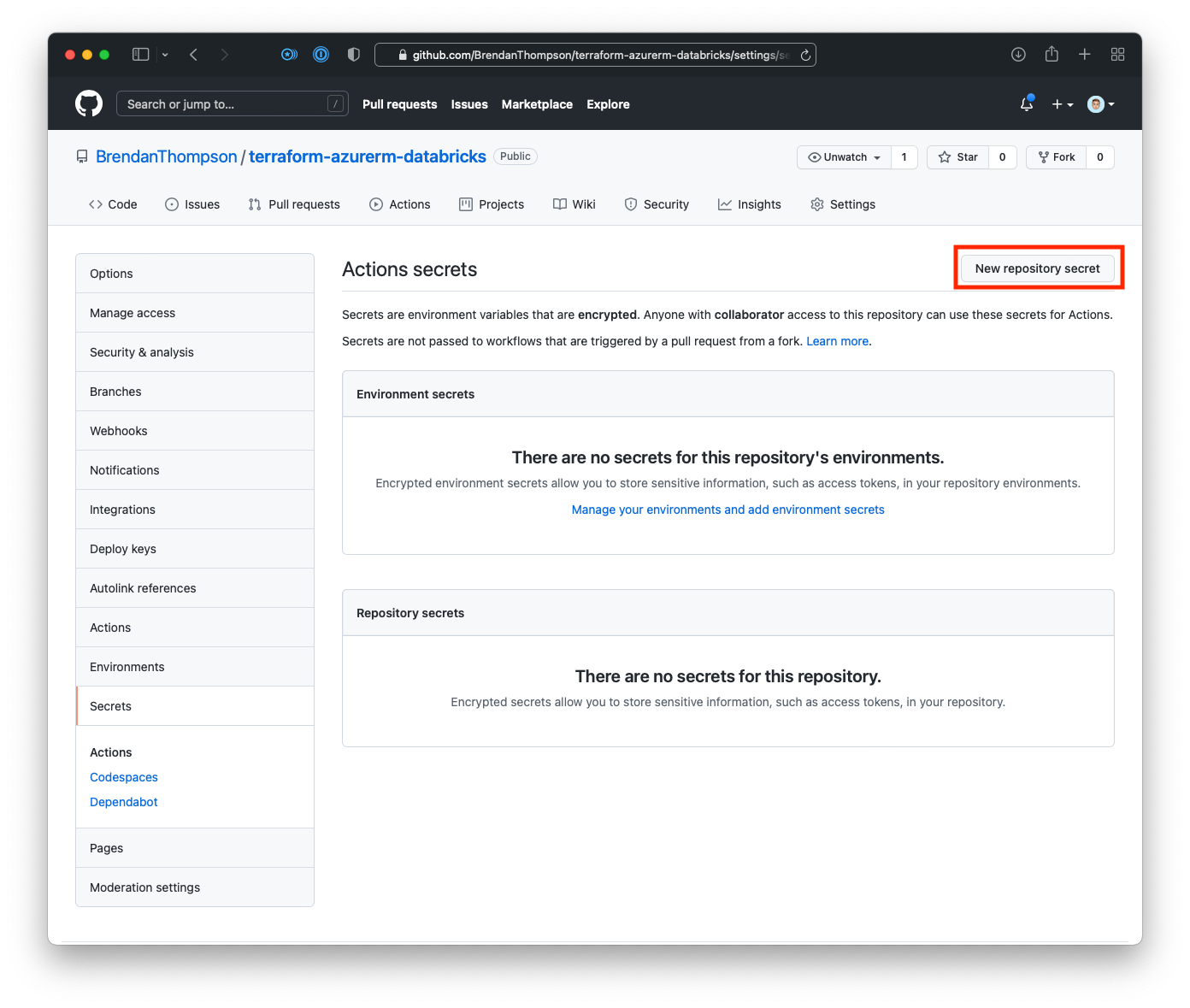

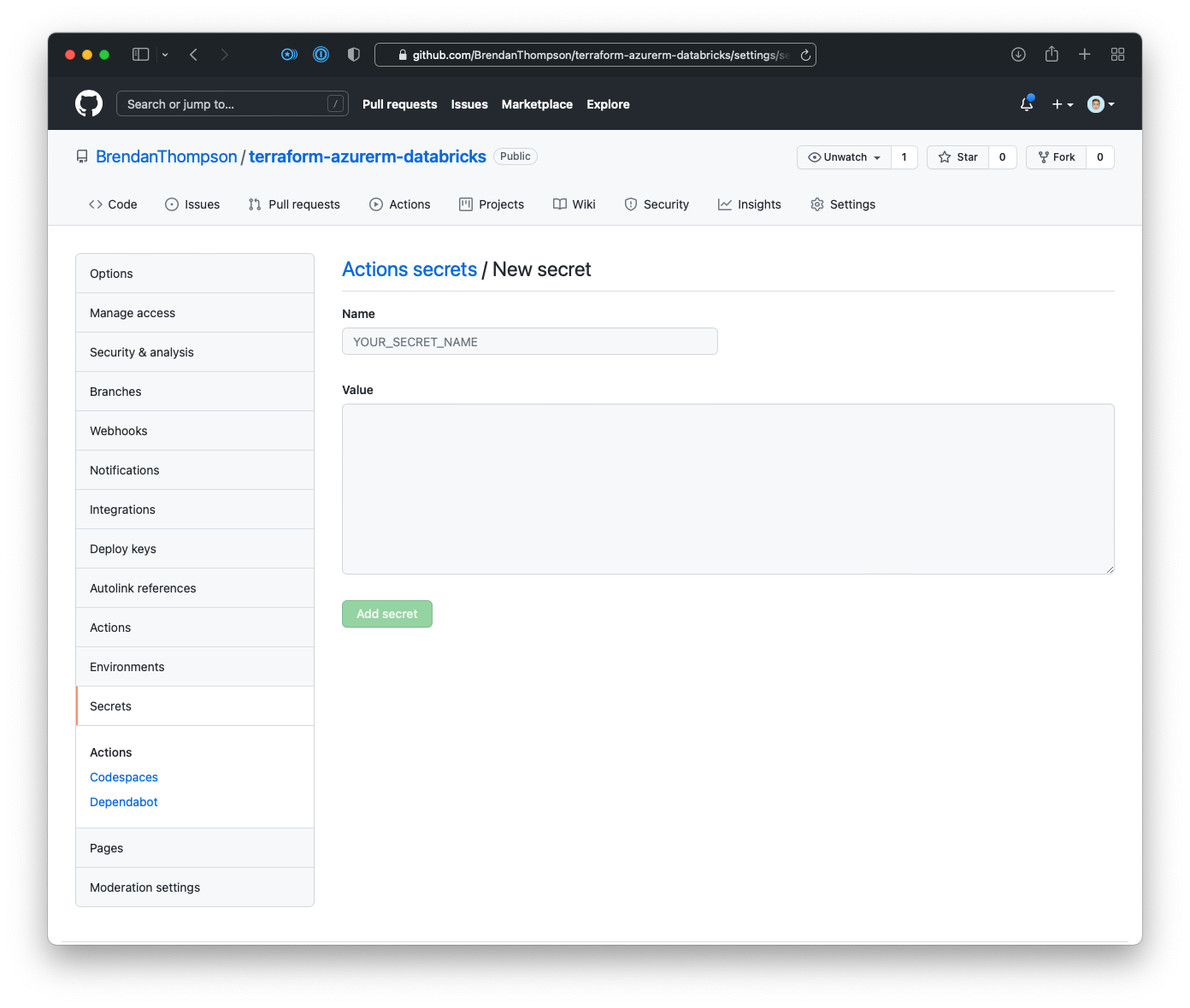

We will now be on the GitHub Secrets page, where we want to click New repository secret (we will need to repeat this step and the next one four times to ensure we have all the required secrets)

On this screen put in the following secret names (and their respective values):

- SUBSCRIPTION_ID
- TENANT_ID
- CLIENT_ID
- CLIENT_SECRET

Once all four of the secrets are in place the Secrets page should look like the below

Now we need to create the workflow file for unit testing, but before we do that we need to ensure from the root of the repository that the following directories exist, and if they do not you will need to create them:

.github/workflows

Once those directories exist we can create the workflow file, which we will call unit-testing.yaml

name: Unit Tests
on: push

jobs:
  go-tests:
    name: Run Unit Tests (terratest)
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - uses: actions/setup-go@v1
        with:
          go-version: 1.17
      - uses: hashicorp/setup-terraform@v1
        with:
          terraform_version: 1.0.2
      - name: Download Go modules
        working-directory: tests
        run: go mod download
      - name: Run tests
        working-directory: tests
        run: make unit-test
        env:
          ARM_SUBSCRIPTION_ID: ${{ secrets.SUBSCRIPTION_ID }}
          AZURE_SUBSCRIPTION_ID: ${{ secrets.SUBSCRIPTION_ID }}
          ARM_TENANT_ID: ${{ secrets.TENANT_ID }}
          AZURE_TENANT_ID: ${{ secrets.TENANT_ID }}
          ARM_CLIENT_ID: ${{ secrets.CLIENT_ID }}
          AZURE_CLIENT_ID: ${{ secrets.CLIENT_ID }}
          ARM_CLIENT_SECRET: ${{ secrets.CLIENT_SECRET }}
          AZURE_CLIENT_SECRET: ${{ secrets.CLIENT_SECRET }}

This workflow is pretty basic; it runs the following steps:

- Check out the current repository
- Set up our environment with the given version of go
- Set up our environment with the given version of Terraform
- Download any required go modules
- Run our Makefile with the unit-test command

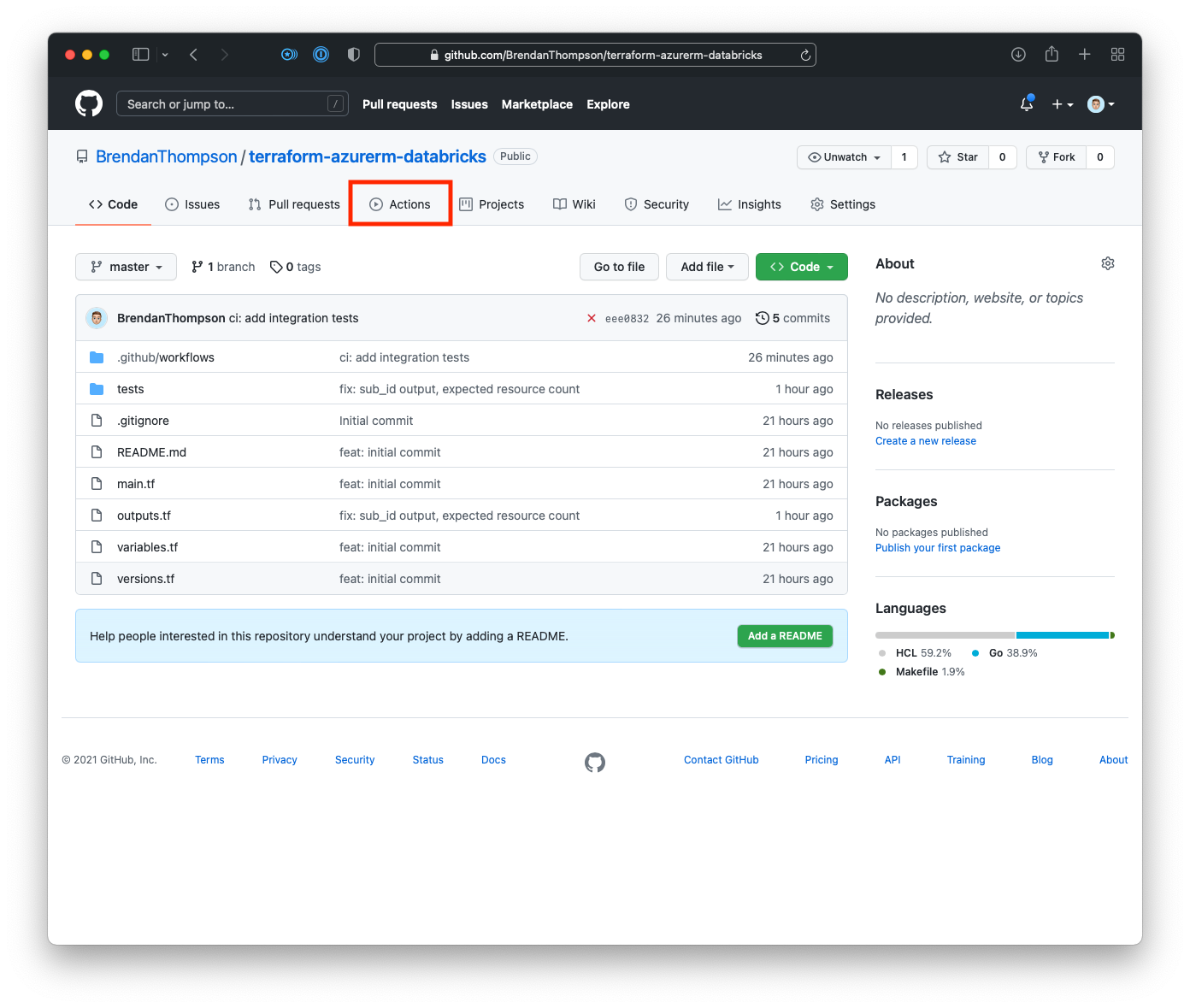

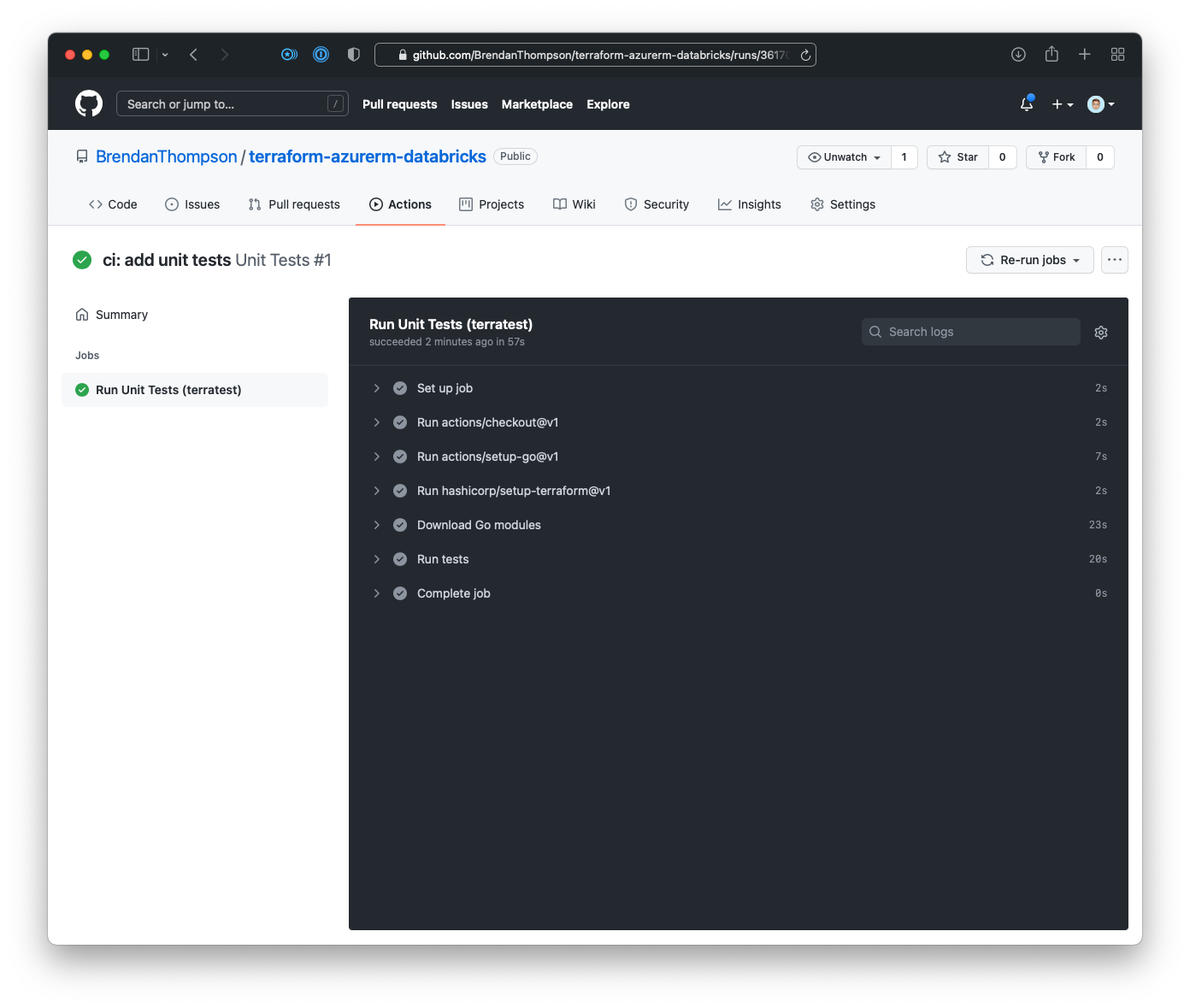

Once that workflow has been created and pushed to our repository we want to go and check the run status. For this, we need to click on Actions from the GitHub repository

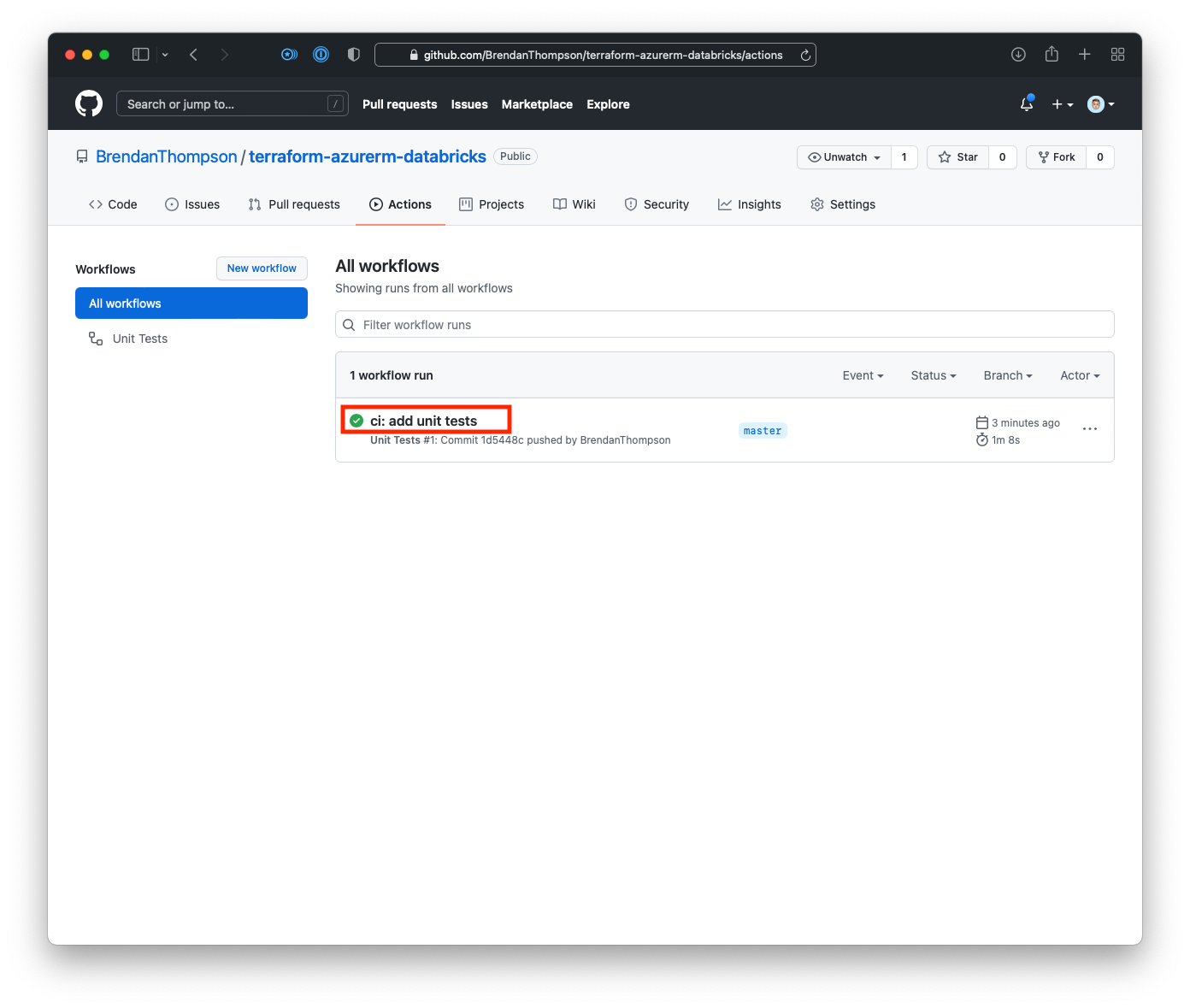

On the GitHub Actions workflow overview page click on the title of the latest run

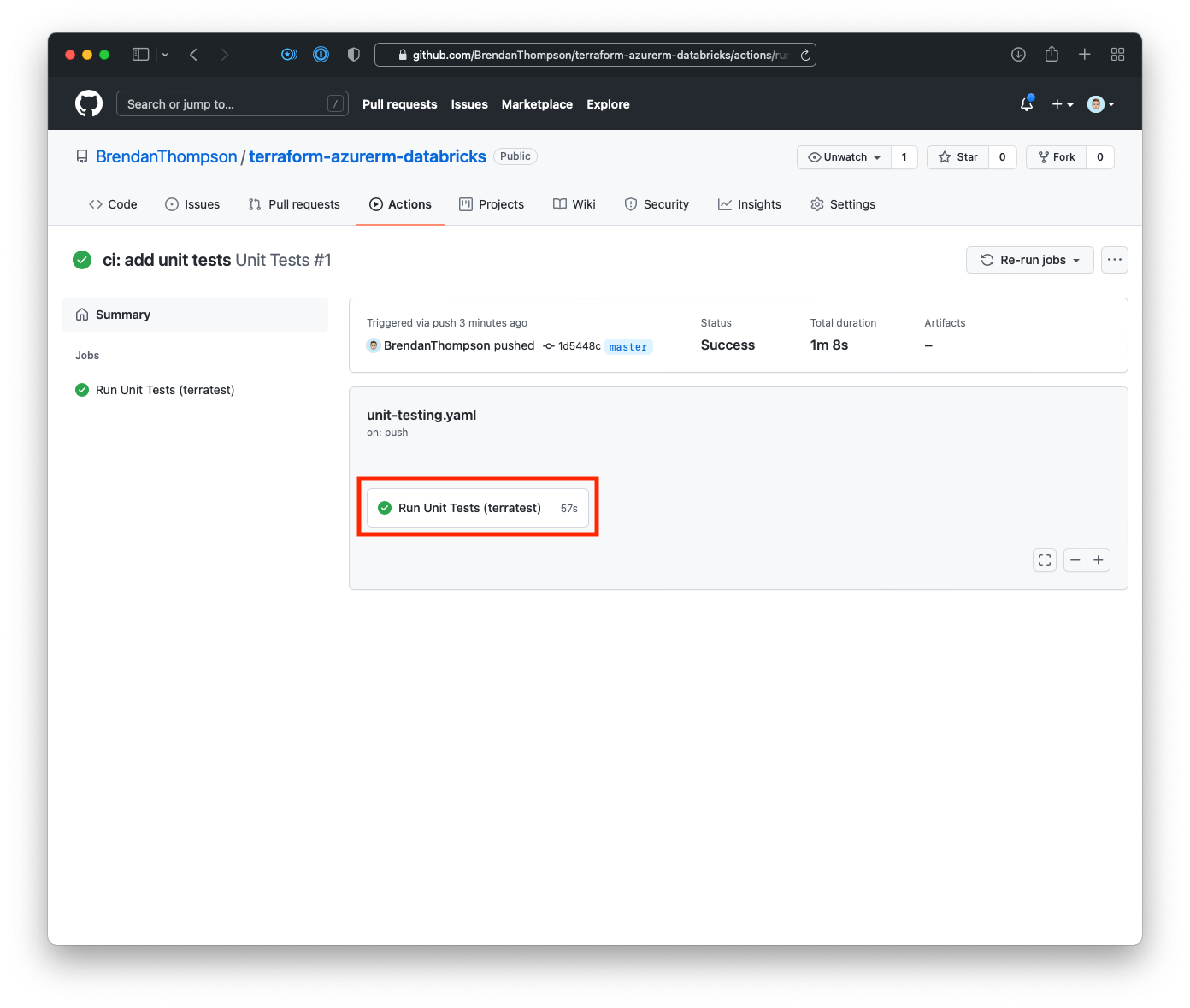

This takes us to the summary page for the workflow run, from here click on the step name Run Unit Tests (terratest)

Now we will head over to the run output page, where we can view the logs for the given run

This is especially valuable when something goes wrong and you need to debug your code.

This process must be done again to create a workflow for our integration tests. First off go and create an integration-testing.yaml in the .github/workflows directory, and put the following code in.

name: Integration Tests
on: push

jobs:
  go-tests:
    name: Run Integration Tests (terratest)
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - uses: actions/setup-go@v1
        with:
          go-version: 1.17
      - uses: hashicorp/setup-terraform@v1
        with:
          terraform_version: 1.0.2
          terraform_wrapper: false
      - name: Download Go modules
        working-directory: tests
        run: go mod download
      - name: Run tests
        working-directory: tests
        run: make integration-test
        env:
          ARM_SUBSCRIPTION_ID: ${{ secrets.SUBSCRIPTION_ID }}
          AZURE_SUBSCRIPTION_ID: ${{ secrets.SUBSCRIPTION_ID }}
          ARM_TENANT_ID: ${{ secrets.TENANT_ID }}
          AZURE_TENANT_ID: ${{ secrets.TENANT_ID }}
          ARM_CLIENT_ID: ${{ secrets.CLIENT_ID }}
          AZURE_CLIENT_ID: ${{ secrets.CLIENT_ID }}
          ARM_CLIENT_SECRET: ${{ secrets.CLIENT_SECRET }}
          AZURE_CLIENT_SECRET: ${{ secrets.CLIENT_SECRET }}

This workflow file is almost identical to our unit testing workflow, except we have added another option to the hashicorp/setup-terraform action to disable the use of the terraform_wrapper. If the wrapper is enabled you will likely see errors when it comes to validating Terraform outputs.

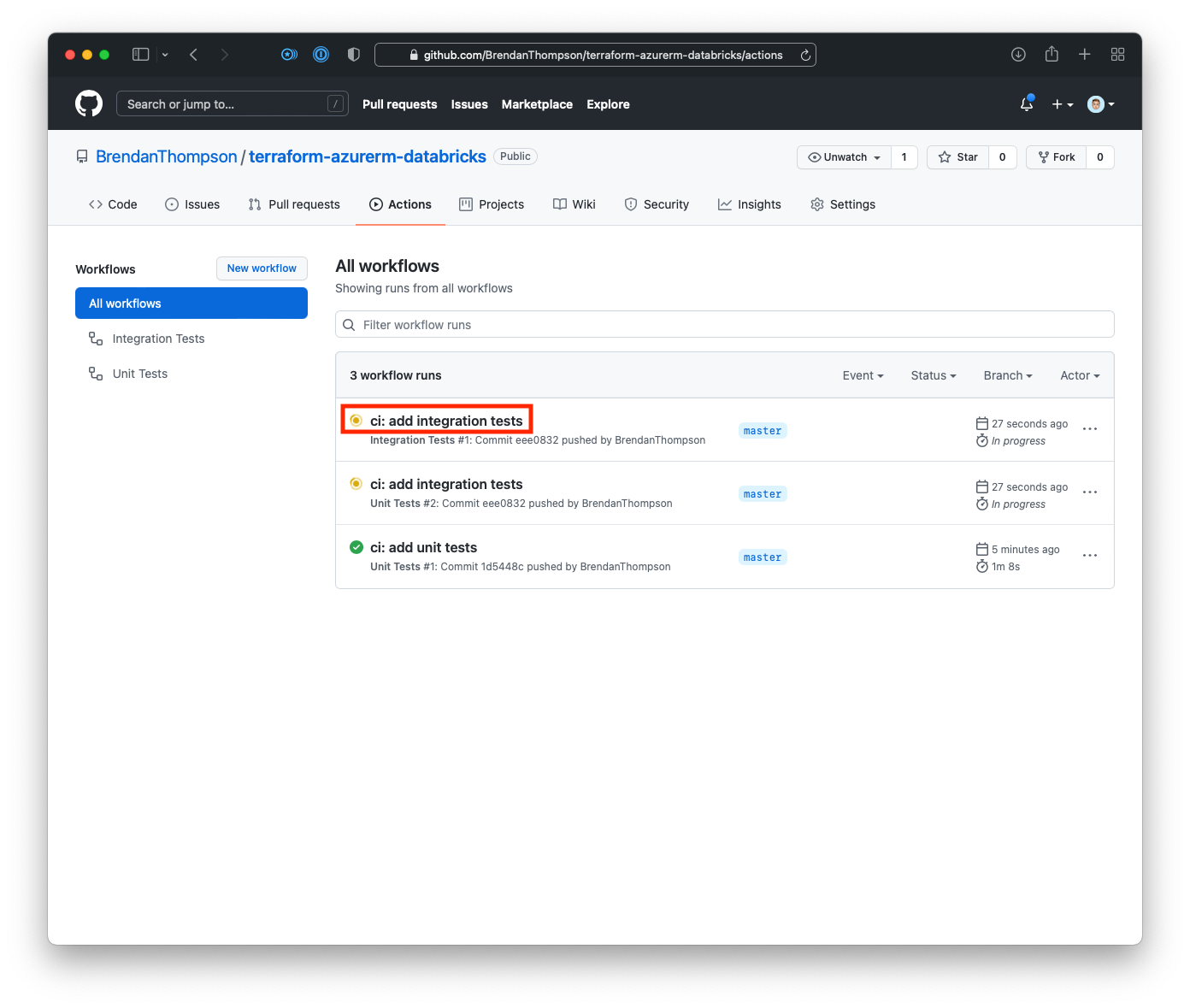

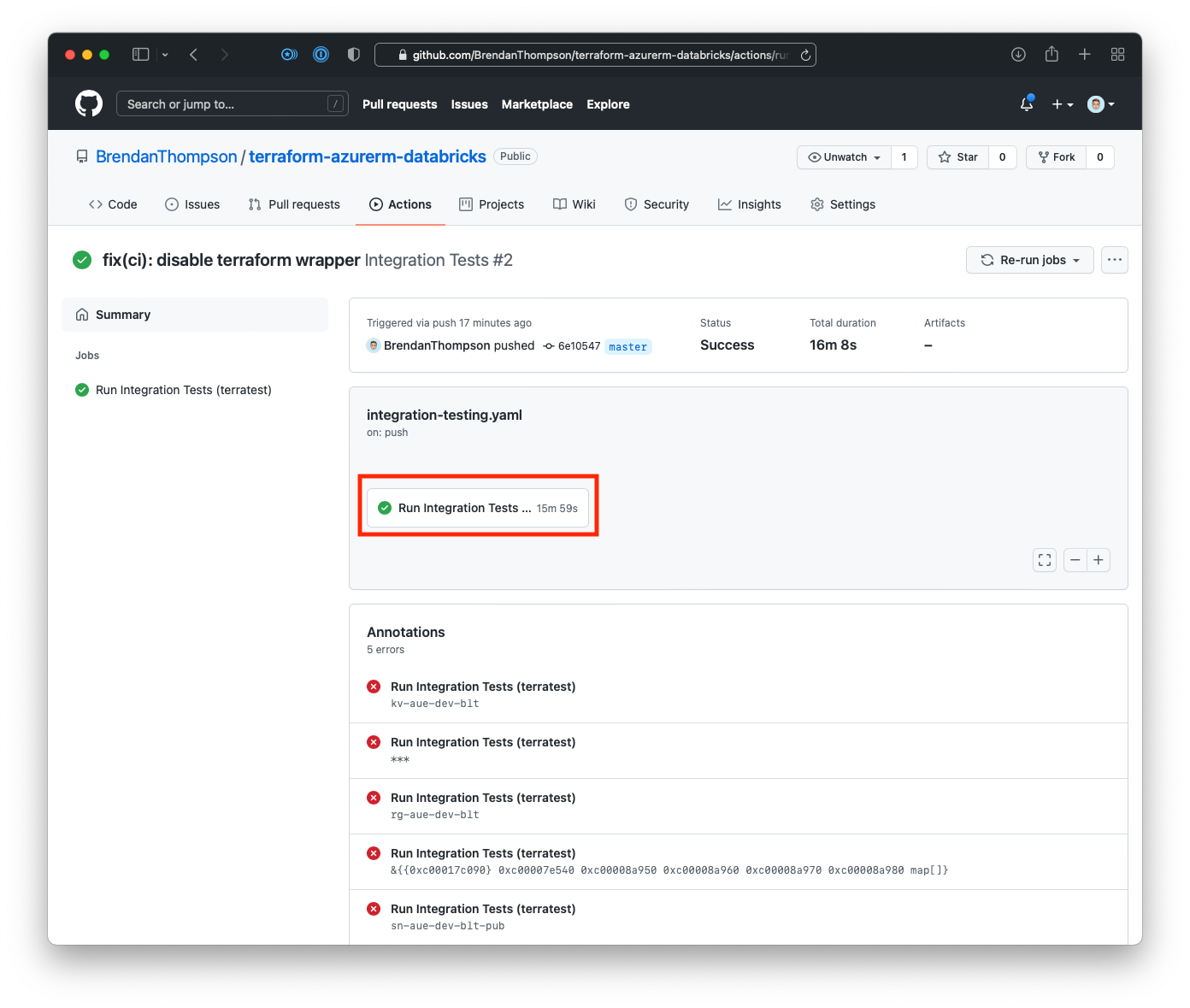

Time to go and check the run. Head back to the GitHub Actions workflow overview page and click on the title of the new integration test run

From here we click on the step name Run Integration Tests (terratest)

You will note, however, that there are a few errors in the Annotations area. These are not actually errors; they are the result of the calls to t.Log() in our test code. Ideally we should remove those from the code.

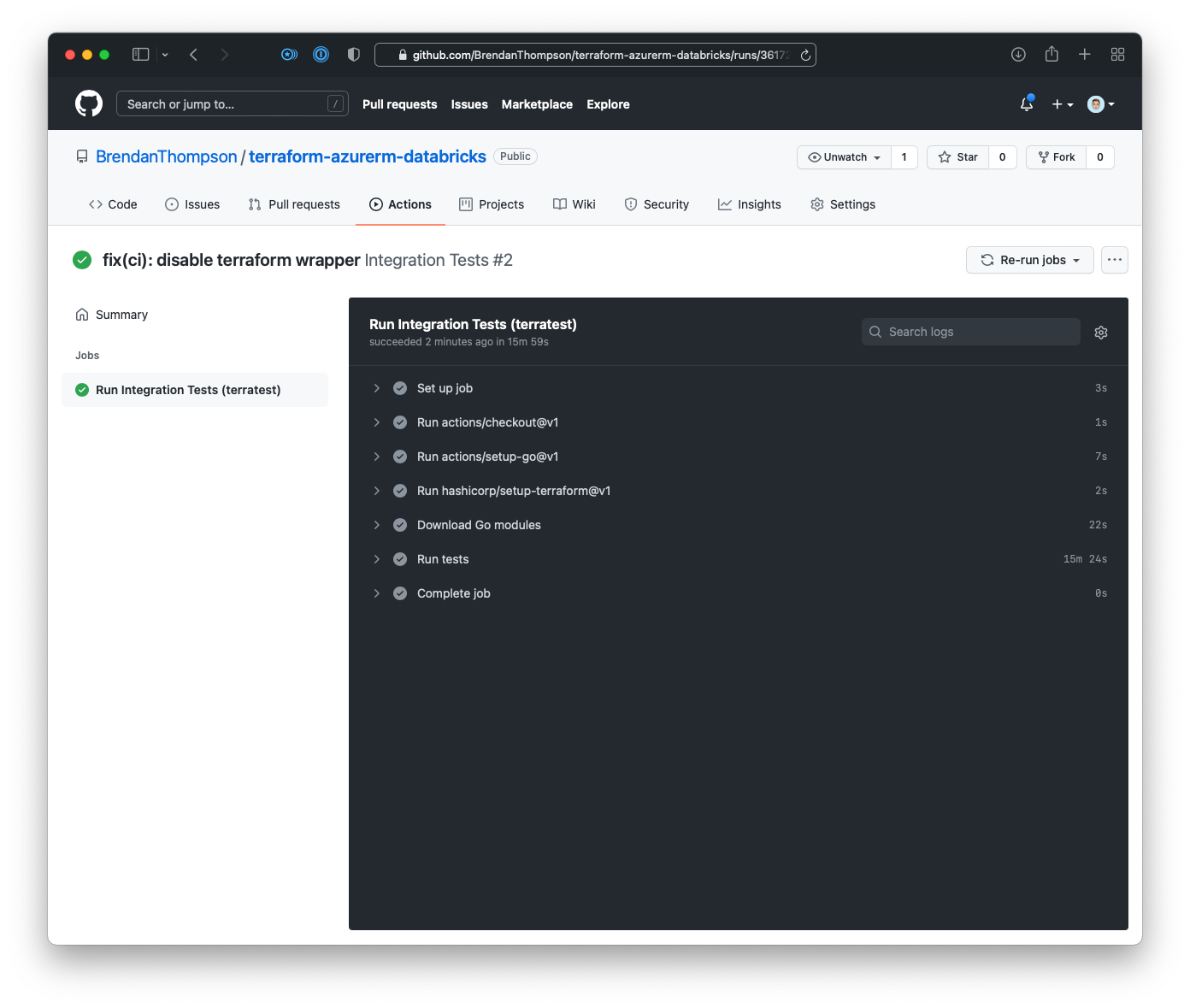

Finally we are on the run output page where we can see the overall state of the run as well as any logs that we might want to look at

And that is the end of how to set up GitHub Actions to automatically execute our tests! Hopefully this post has given you a place to get started with writing terratest tests; a lot of the things shown here could easily be applied to other clouds too. I always find the most difficult thing is trying to determine what you actually want to test, rather than writing the tests themselves. If there is anything you feel I haven't covered in this post please feel free to reach out to me!

The workflow files themselves will execute any time a push to the repository is made; this is due to the on: push line in the workflow file. If you're interested in learning more about the different triggers you can set for your workflow it would be a good idea to have a read of Workflow syntax for GitHub Actions - On.