Anatomy of a service running in Kubernetes on Azure

Brendan Thompson • 22 October 2021 • 7 min read

This post takes us on a journey of discovery, looking at what constitutes a Kubernetes Service and how that maps back to resources in Azure, as well as to the Kubernetes cluster itself, when running on Azure Kubernetes Service (AKS).

First, let's define what a Service is. In the context of this blog post, it refers to more than just the Service resource type in Kubernetes: it means an application hosted within a Kubernetes cluster and exposed to consumers. That Service might be exposed internally on an RFC-1918 address range, or it might be externally facing.

Azure Resources

The first thing to look at is the set of Azure resources that must be deployed before we can use our Kubernetes cluster. AKS can be deployed in a few different ways; for the most part, the differences relate to how secure the cluster is, specifically in terms of network security. I typically describe them as follows:

- Public Cluster - the Kubernetes API/Hosted Control Plane (HCP) is accessible via the internet, and the cluster uses kubenet networking. There is no connectivity to any RFC-1918 address spaces.

- Partial-private Cluster - the Kubernetes API/HCP is accessible via the internet; however, the cluster uses a Container Network Interface (CNI) plugin, which allows access to internal networks via a connection to an Azure virtual network.

- Private Cluster - the Kubernetes API/HCP is only accessible via an Azure virtual network, typically connected back to your organisation's network; the cluster uses a CNI plugin.
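To make the three categories concrete, here is a minimal sketch that classifies a cluster from two settings: whether the API/HCP is reachable from the internet, and which networking plugin is used. This encodes the taxonomy above, not an Azure API; the function name and the "kubenet"/"cni" labels are hypothetical. A cluster with a private API server is treated as a Private Cluster regardless of plugin, since kubenet can still be used there.

```python
from enum import Enum


class ClusterType(Enum):
    """The three AKS deployment styles described in this post."""
    PUBLIC = "Public Cluster"
    PARTIAL_PRIVATE = "Partial-private Cluster"
    PRIVATE = "Private Cluster"


def classify_cluster(api_server_public: bool, network_plugin: str) -> ClusterType:
    """Map two cluster settings onto the Public/Partial-private/Private taxonomy.

    api_server_public: True if the Kubernetes API/HCP is reachable from the internet.
    network_plugin: "kubenet" or "cni" (hypothetical labels for illustration).
    """
    plugin = network_plugin.lower()
    if plugin not in ("kubenet", "cni"):
        raise ValueError(f"unknown network plugin: {network_plugin!r}")
    if not api_server_public:
        # The HCP is only reachable from an Azure virtual network.
        return ClusterType.PRIVATE
    if plugin == "cni":
        # Public API, but Pods can reach internal networks via the VNet.
        return ClusterType.PARTIAL_PRIVATE
    # Public API and kubenet: no RFC-1918 connectivity.
    return ClusterType.PUBLIC


print(classify_cluster(True, "kubenet").value)   # Public Cluster
print(classify_cluster(True, "cni").value)       # Partial-private Cluster
print(classify_cluster(False, "cni").value)      # Private Cluster
```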

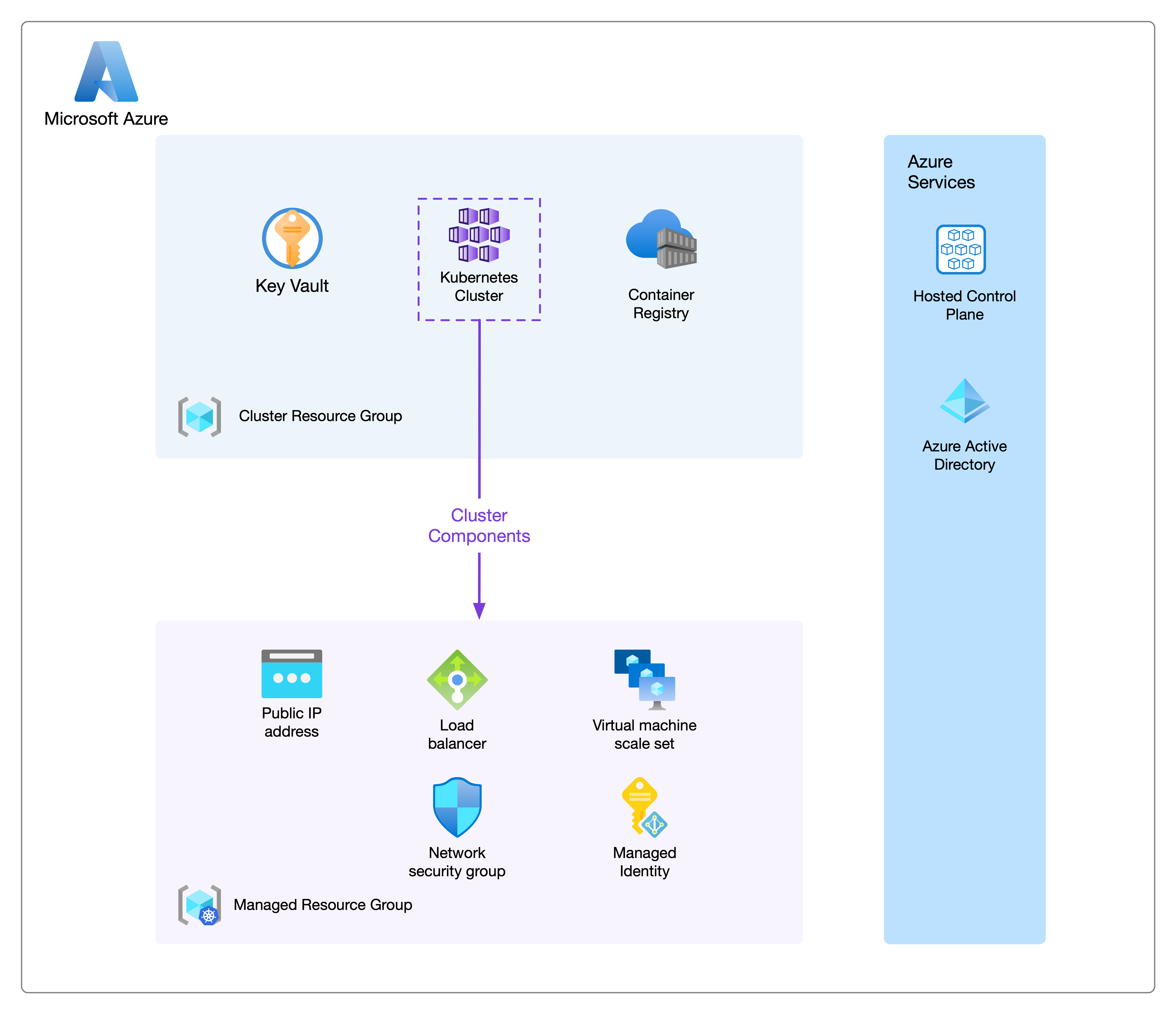

Although it is possible to use kubenet for both Partial-private and Private clusters, I don't think you achieve the full potential of that level of privacy without CNI. One of the main reasons to configure a cluster this way is to allow Pods to access internal resources, and internal resources to access Kubernetes-hosted services.

In this instance, we are going to talk about a Public Cluster implementation. As the diagram shows, there are three areas of interest:

- Cluster Resource Group - where we deploy the AKS Azure resource itself and any supporting infrastructure

- Managed Resource Group - where Microsoft deploys the backing infrastructure for the cluster

- Azure Services - Azure managed services such as the HCP and Azure Active Directory (AAD)

In the Cluster Resource Group, we have deployed Azure Kubernetes Service (AKS), Azure Key Vault (KV) and Azure Container Registry (ACR). These services are commonly used together when operating an AKS environment on Azure: the AKS instance pulls any internal/private container images from ACR, and KV serves as a place to store and consume any secrets our environment might need.
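As a small illustration of the ACR side, the sketch below builds the image reference an AKS workload would pull. It assumes Azure public cloud, where a registry's login server is the lowercase registry name followed by .azurecr.io, and that registry names are 5 to 50 alphanumeric characters. The registry, repository and tag names are hypothetical.

```python
import re

# Assumption: ACR registry names are 5-50 alphanumeric characters, and the
# login server in Azure public cloud is "<name>.azurecr.io" in lowercase.
_REGISTRY_NAME = re.compile(r"[A-Za-z0-9]{5,50}")


def acr_image_ref(registry: str, repository: str, tag: str = "latest") -> str:
    """Build the fully qualified image reference for an image stored in ACR."""
    if not _REGISTRY_NAME.fullmatch(registry):
        raise ValueError(f"invalid ACR registry name: {registry!r}")
    return f"{registry.lower()}.azurecr.io/{repository}:{tag}"


# Hypothetical registry, repository and tag.
print(acr_image_ref("MyRegistry", "sorting-hat", "1.0.0"))
# myregistry.azurecr.io/sorting-hat:1.0.0
```

Pinning an explicit tag rather than relying on "latest" makes it clear which image version the cluster is running.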

The contents of the Managed Resource Group vary depending on which type of AKS cluster is deployed; here we are looking at a Public Cluster. This Resource Group is managed solely by Microsoft and, by default, is named with an MC_ prefix followed by the Cluster Resource Group name, the AKS instance name and the Azure region. To allow traffic to ingress into the cluster, a Load Balancer (LB) and a Public IP (PIP) are both deployed here, along with a Network Security Group (NSG) to manage ingress and egress traffic. Kubernetes node pools can be backed by either an Azure Availability Set or a Virtual Machine Scale Set (VMSS), and a cluster can have multiple node pools. In this instance, we have a single VMSS-backed node pool, as we want the pool to scale autonomously. The final piece of this managed puzzle is the Managed Identity (this can also be a User Assigned Identity), which allows the cluster and its resources to authenticate back into Azure.
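The default naming convention for the managed resource group is easy to express in code. By default AKS uses MC_, then the cluster's resource group, then the cluster name, then the region, joined by underscores. The names in this sketch are hypothetical.

```python
def managed_resource_group_name(resource_group: str, cluster_name: str, location: str) -> str:
    """Default name of the Microsoft-managed (node) resource group for an AKS cluster."""
    return f"MC_{resource_group}_{cluster_name}_{location}"


# Hypothetical resource group, cluster name and region.
print(managed_resource_group_name("rg-sorting", "aks-sorting", "australiaeast"))
# MC_rg-sorting_aks-sorting_australiaeast
```

This is only the default: the name can be overridden when the cluster is created, for example with the --node-resource-group option of az aks create.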

We also consume Azure Services: services provided by Azure as part of the platform, which do not live within a Resource Group. The HCP, while part of the AKS resource, is not visible anywhere to us as consumers, and AAD allows users and services alike to authenticate to the cluster.

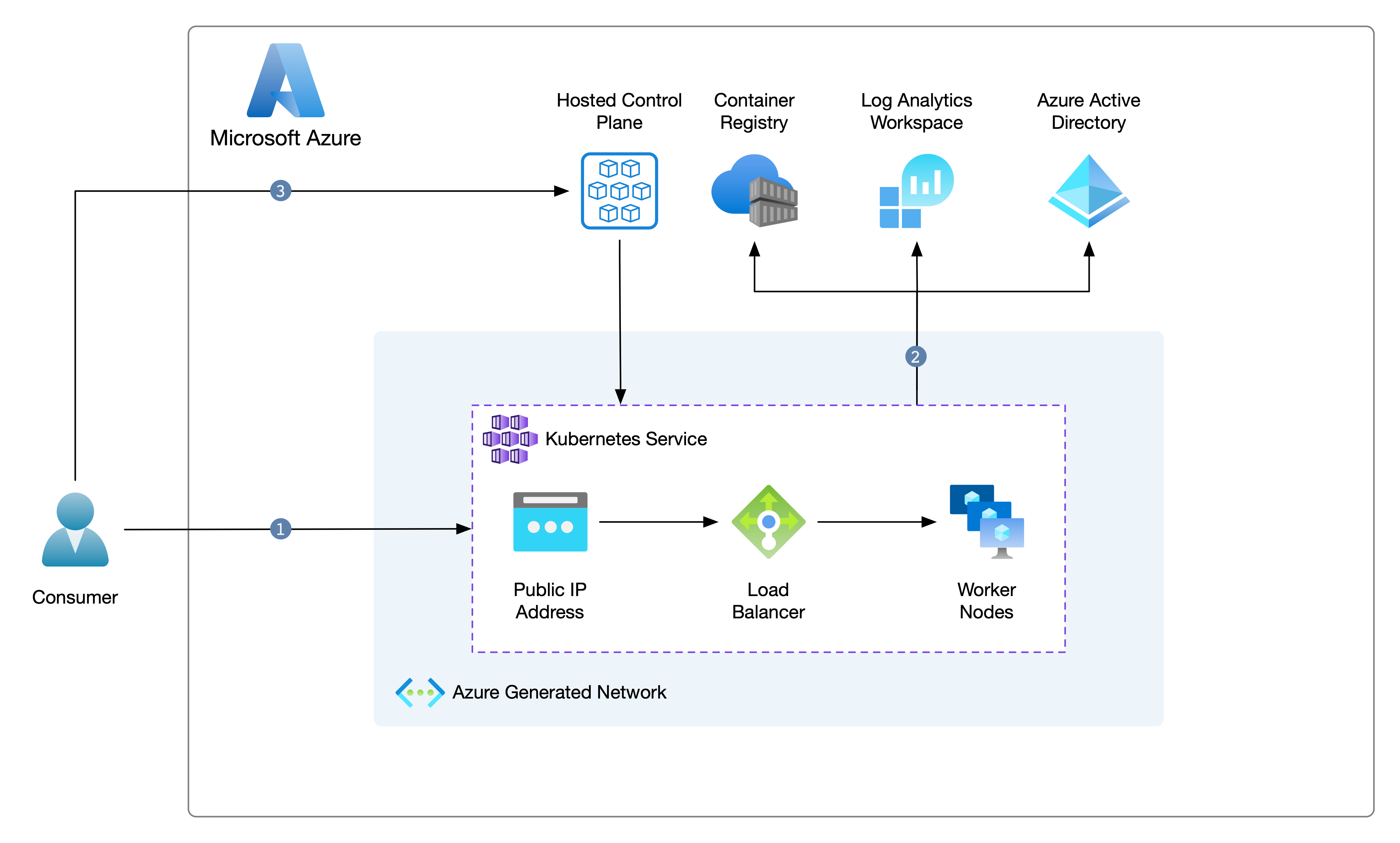

The next piece is how traffic flows into our cluster, whether it is Operator traffic or Consumer traffic. I define an Operator as someone who manages the Kubernetes cluster or the resources it contains, and a Consumer as someone who consumes an API or app hosted on our cluster.

The diagram above marks out three points; we will go through each in turn.

- A Consumer reaches an API/App hosted on our cluster either via its PIP or, more likely, via its DNS record. The traffic then passes through our LB before being directed to the relevant worker nodes where the Kubernetes Service(s) and Pods are deployed.

- The Cluster consumes Azure services through the Azure Fabric; these services are items such as Log Analytics or AAD.

- An Operator hits the HCP endpoint via its hostname and strictly over HTTPS to control resources deployed within the cluster.
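The three flows can be summarised as a small data model. This sketch simply restates the points above as ordered hops and adds a check that Operator traffic targets the HCP strictly over HTTPS. The Flow class, the function name and the HCP hostname are hypothetical, for illustration only.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Flow:
    """One numbered traffic flow from the diagram."""
    actor: str
    hops: tuple[str, ...]  # ordered steps the traffic takes


FLOWS = {
    1: Flow("Consumer", ("DNS record", "Public IP", "Load Balancer",
                         "worker node", "Kubernetes Service", "Pod")),
    2: Flow("Cluster", ("Azure Fabric", "Azure service (e.g. Log Analytics, AAD)")),
    3: Flow("Operator", ("HCP endpoint over HTTPS",)),
}


def is_valid_operator_url(url: str) -> bool:
    """Operator traffic should target the HCP hostname strictly over HTTPS."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and bool(parsed.hostname)


for number, flow in FLOWS.items():
    print(number, flow.actor, " -> ".join(flow.hops))

# Hypothetical HCP hostname.
print(is_valid_operator_url("https://myaks-dns.hcp.australiaeast.azmk8s.io:443"))  # True
print(is_valid_operator_url("http://myaks-dns.hcp.australiaeast.azmk8s.io"))       # False
```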

Cluster Resources

Now that our cluster is deployed and we have seen how traffic reaches it, what do the resources inside the Kubernetes cluster itself look like?

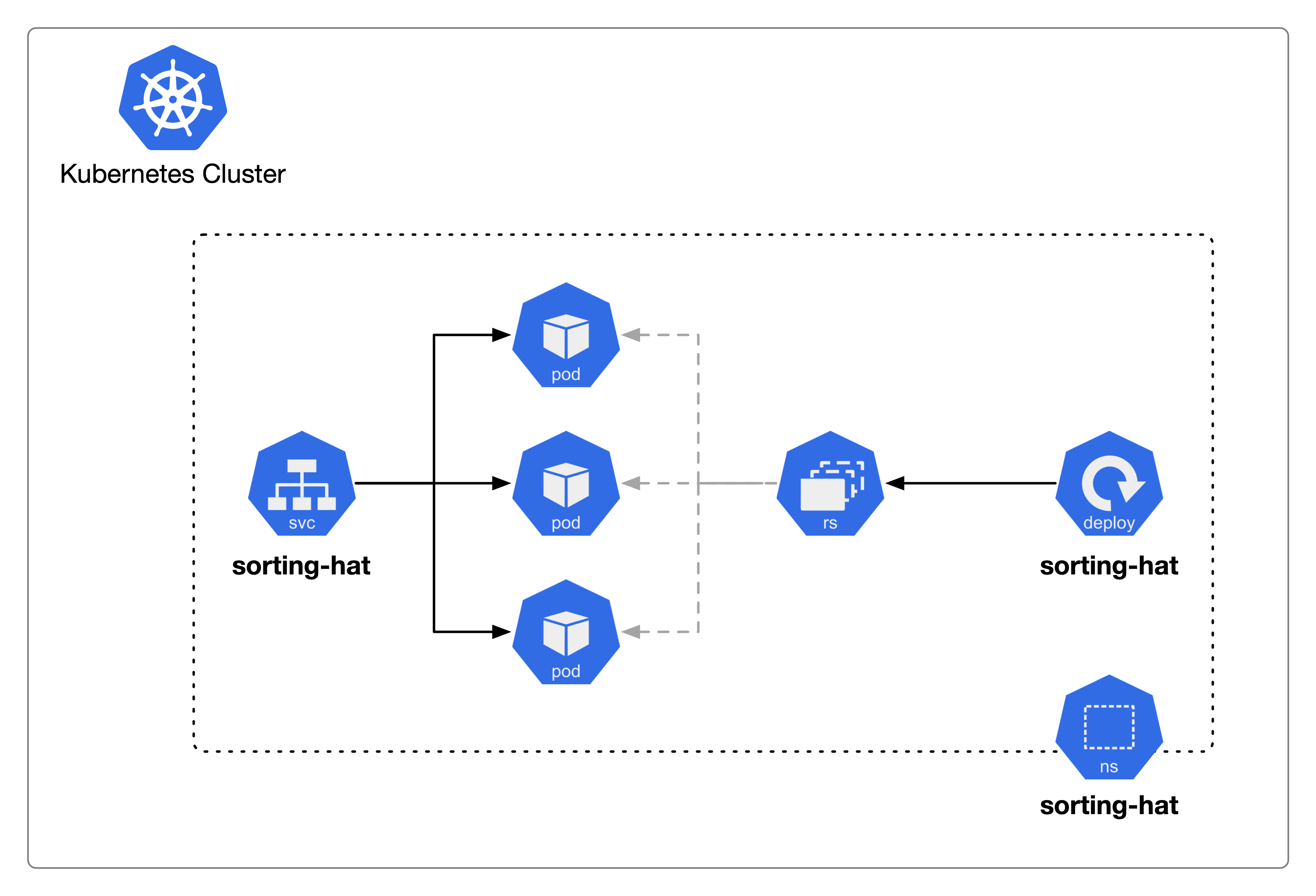

The example API discussed here sorts Hogwarts students into one of the school houses. The API consists of a single container, and its data is stored statically within the application.

At a high level, the Service is composed of the following elements:

- Namespace - a boundary for containing the other Kubernetes resources as well as a place to restrict networking and RBAC

- Deployment - provides declarative updates to Pods and ReplicaSets

- ReplicaSet - ensures that a specified number of Pod replicas are running at any one time, thereby controlling the availability of Pods

- Pods - the atomic unit of Kubernetes; a Pod represents a set of running containers within a cluster

- Containers - lightweight, portable executable images that contain software and all of its dependencies

- Service - an abstract way to expose an application running on a set of Pods as a network service
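The ReplicaSet's job of keeping a specified number of Pods running can be sketched as a reconcile step: compare the desired replica count with the Pods that exist, then create or delete the difference. This is a toy model under simplifying assumptions, not the real controller, which is more involved (for example, in how it chooses which Pods to delete). The function and Pod names are hypothetical.

```python
def reconcile(desired: int, running: list[str]) -> tuple[int, list[str]]:
    """Return (number of Pods to create, Pods to delete) to reach `desired`.

    A toy model of a ReplicaSet control loop; the real controller is more
    involved, for example in how it chooses which Pods to delete.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    missing = desired - len(running)
    if missing >= 0:
        # Too few (or exactly enough) Pods: create the shortfall.
        return missing, []
    # Too many Pods: delete the surplus (here, simply the last ones listed).
    return 0, running[missing:]


# Hypothetical Pod names; the Deployment asks for 3 replicas.
print(reconcile(3, ["pod-a"]))                              # (2, [])
print(reconcile(3, ["pod-a", "pod-b", "pod-c"]))            # (0, [])
print(reconcile(3, ["pod-a", "pod-b", "pod-c", "pod-d"]))   # (0, ['pod-d'])
```

The controller runs this comparison repeatedly, so if a Pod dies the next pass notices the shortfall and replaces it.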

As the diagram above shows, we have a Deployment that has instructed the ReplicaSet to ensure that 3 Pods are running on the cluster. In this instance, each of those Pods contains a single Container. The Kubernetes Service allows access to these Pods over an exposed port. This port is defined in three places: the Container, the Pod and the Service.
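The sketch below expresses a Deployment and a Service for the sorting API as Python dictionaries that mirror the Kubernetes manifest structure (apps/v1 Deployment, v1 Service). It shows the port appearing as containerPort on the container inside the Pod template and as targetPort on the Service, plus a helper that checks the Service selects the Pods and targets a declared port. The namespace, labels, image and port numbers are hypothetical, and the helper is illustrative rather than part of any Kubernetes tooling.

```python
PORT = 8080                      # hypothetical container port for the sorting API
LABELS = {"app": "sorting-hat"}  # hypothetical Pod labels

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "sorting-hat", "namespace": "hogwarts"},
    "spec": {
        "replicas": 3,  # the ReplicaSet keeps three Pods running
        "selector": {"matchLabels": LABELS},
        "template": {  # the Pod template
            "metadata": {"labels": LABELS},
            "spec": {
                "containers": [{
                    "name": "sorting-hat",
                    "image": "myregistry.azurecr.io/sorting-hat:1.0.0",
                    "ports": [{"containerPort": PORT}],
                }],
            },
        },
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "sorting-hat", "namespace": "hogwarts"},
    "spec": {
        "type": "LoadBalancer",  # exposed via the Azure LB and a Public IP
        "selector": LABELS,      # routes traffic to Pods carrying these labels
        "ports": [{"port": 80, "targetPort": PORT}],
    },
}


def service_targets_deployment(svc: dict, dep: dict) -> bool:
    """True if the Service selects the Deployment's Pods on a declared container port."""
    template = dep["spec"]["template"]
    labels = template["metadata"]["labels"]
    selects = all(labels.get(k) == v for k, v in svc["spec"]["selector"].items())
    container_ports = {
        p["containerPort"]
        for c in template["spec"]["containers"]
        for p in c.get("ports", [])
    }
    target_ports = {p["targetPort"] for p in svc["spec"]["ports"]}
    return selects and target_ports <= container_ports


print(service_targets_deployment(service, deployment))  # True
```

Because kubectl apply accepts JSON as well as YAML, these dictionaries could be serialised with json.dumps and applied directly, though YAML is the more common choice.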

Kubernetes offers many other components to cater for myriad situations; however, this covers the commonly used resources needed to run a Service inside a Kubernetes cluster on Azure!

Next Steps

If you're interested in learning how to deploy a Kubernetes cluster in Azure, head over to my Kubernetes in Space - Azure post!